Age Verification Is Coming for Chatbots

I’m now more convinced than ever that governments will start regulating generative AI by limiting access to tools like ChatGPT based on a user’s age, and I’m not sure that’s a good thing. Outside of AI, state and national governments are increasingly moving to implement strict age verification that limits minors’ access to various online sites. This includes not only adult content but also social media. With several recent stories about teens harming themselves in connection with chatbot interactions, it feels like only a matter of time before some form of age-restricted access arrives for generative AI.

I know this may sound like a hot take to quite a few people. I was even debating the topic with Ted Underwood on Bluesky several weeks ago. I’d link to our conversation, but I’d now need to use a VPN to access it, because Bluesky is no longer available in my state of Mississippi. Several years ago, a young man was horribly bullied on social media through a sextortion scheme and tragically took his own life. In response, Mississippi passed an age verification law, designed to protect minors, that compels every social media company to enact age verification or face stiff fines for each violation. Bluesky couldn’t afford either compliance or the fines, so it pulled out of the state entirely.

Social media isn’t the only industry facing a growing patchwork of state age verification laws that limit access. The adult entertainment industry likewise has to enact age verification in nearly two dozen states. Many sites, including massive ones like Pornhub, claim they cannot afford to implement age verification on their platforms, so, like Bluesky, they simply blocked access in many states.

From Limiting Access to Removing Access: The Unintended Consequences of Age Verification

No one fully anticipated the impact child safety laws would have on digital access across the web. These laws enjoy fairly broad bipartisan support worldwide, but age verification requirements are increasingly problematic and set off a chain reaction that limits equitable access to digital services. Here are the two main issues:

Age verification is very expensive to implement and carries massive penalties each time it fails. Smaller websites often cannot afford to build their own age verification system, so they contract with a growing industry of ID verification services that make shady promises about what they will do with the data they collect. According to one source, implementing age verification might cost companies $20,000 to $80,000 or more, before accounting for ongoing upkeep, data retention, and privacy costs. Beyond checking a driver’s license or other government ID, some of these services add multi-factor verification through facial scanning or other biometric techniques. Which brings me to my second point.

Not many people are eager to share an ID or have their face scanned in order to access a service, whether it is adult content or social media. Traffic to certain sites drops by nearly 80% once age verification is in place because users don’t feel comfortable handing over their private information. Worse, age verification appears to reward noncompliant sites by shifting user traffic to them, undermining the core purpose of the law.

How Age Verification Will Come to AI

In the past year, OpenAI saw a phenomenal rise in users seeking therapy and companionship from ChatGPT. Data from both the US and the UK confirm that a growing number of teens and vulnerable children are using the technology in ways that might be antisocial or otherwise detrimental to their mental health. That’s a real concern, and it requires a societal response that goes beyond simply limiting access to AI features. Age verification offers no foolproof method of keeping kids off certain sites. The internet is a porous space that doesn’t respect physical boundaries or geographic borders. A simple VPN service lets anyone, including teens, reach a site by connecting through a region without age verification.

Obviously, we should do all we can to protect children and other vulnerable populations in physical and digital spaces, but in doing so, we should be mindful of the impact these efforts have on broader society. Very few experts believe these laws will actually protect children. I think we should focus less on banning access to sites and more on advocating for oversight and transparency and on educating parents and teens about potential harms. I spoke about this in a recent NPR podcast episode, “Teens are using AI. Here’s how parents can talk about it.”

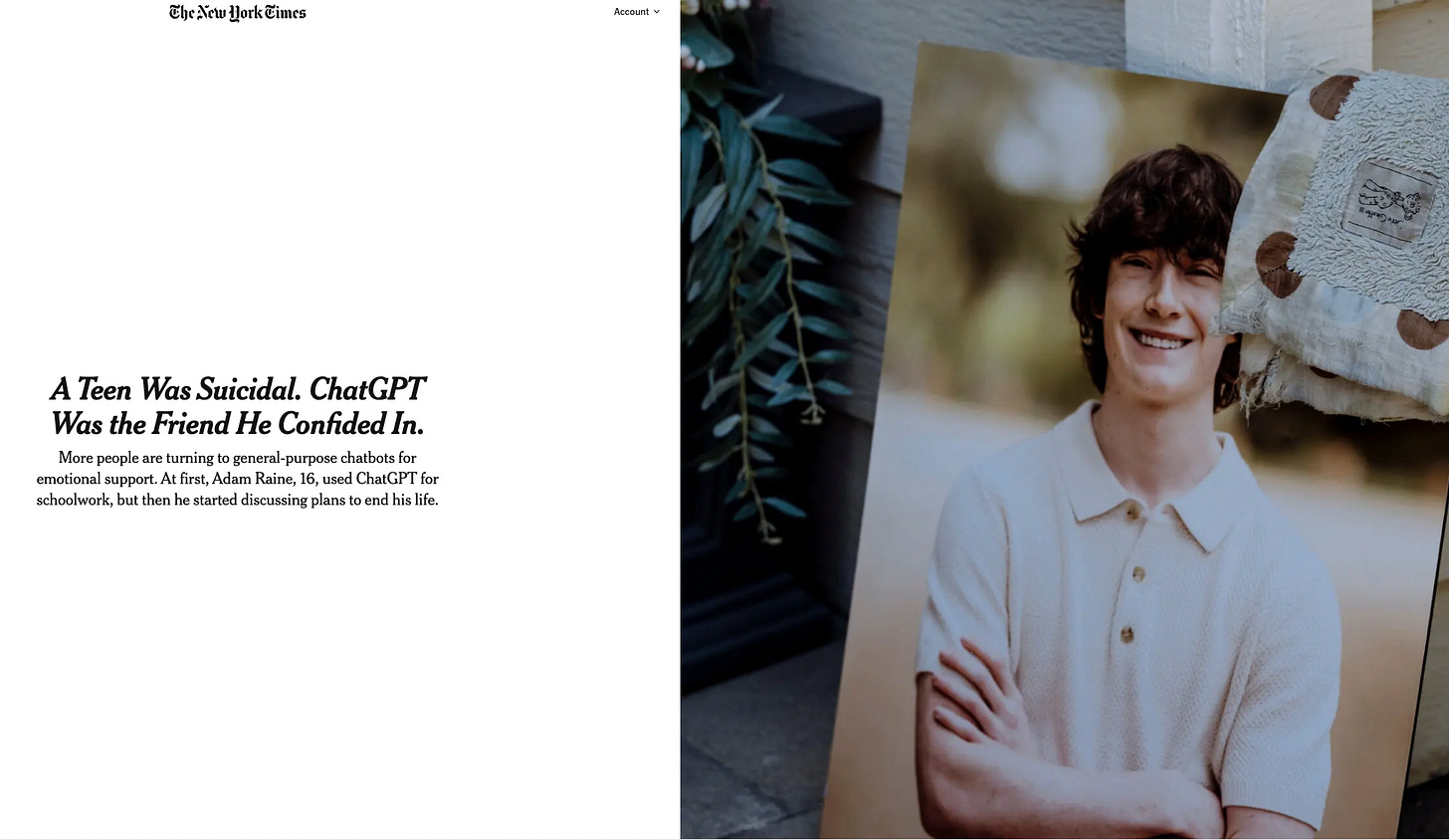

The risks of not engaging children in dialogue are very real. The story below mentions suicide, so please read with caution. I’ve included it because it will likely be the tipping point for many people and lead to age verification being implemented in AI systems.

Recently, a 16-year-old boy named Adam Raine took his own life. In a lawsuit against OpenAI, his parents allege that his interactions with ChatGPT contributed to his death and claim that he spent hours each day on ChatGPT discussing ways he could kill himself. Raine even shared images with ChatGPT of rope burns on his neck from an attempted suicide. He was able to obtain instructions on how to end his life through his conversations with ChatGPT because he jailbroke the model, using simple but clever tactics to bypass its built-in safeguards. The hack? He made ChatGPT think he was writing a story about suicide. The billions invested in safety and alignment research weren’t able to stop a 16-year-old boy from convincing ChatGPT that his discussions about suicide were for a fictional short story he was writing.

OpenAI published a lengthy public statement outlining what it is doing to address the issue. But as its earlier stumble with an update that amplified the bot’s sycophancy toward users showed, making an AI system safe for nearly 800 million users isn’t really possible.

We’ve seen horrible stories about earlier tragedies involving services like Character.ai, and I’m sure it won’t be long before state legislatures make the connection that even general-purpose chatbots may pose dangers for teens. I think it is very likely that some states will soon move to restrict access by requiring age verification. Companies like YouTube have already started using machine learning and other AI techniques to predict a user’s age.

The AI Bubble

OpenAI, Meta, Google, Microsoft, and even xAI won’t have any trouble implementing age verification. However, the thousands of smaller companies that use API access to offer AI models through countless wrapper apps will likely find themselves in the same position Bluesky did in Mississippi. Whether they face fines, pay for increasingly expensive age verification services that drive away users, or pull out of states that enact such laws, many AI companies will face extinction. As absurd as it sounds, age verification may be the tipping point that bursts the AI bubble.

The idea that age verification could have this much impact on AI might seem like a stretch to some, but I’d encourage you to consider just how much of the economy is now being supported by venture capital flowing into AI deployments, and what the sudden loss of that investment would mean. Many ardent anti-AI folks will cheer. So will child safety advocates. But innovation- and equity-minded people will despair, because even if the bubble does burst and 90% of AI companies fold, what remains will be a handful of companies at the top, able to dominate the marketplace even more than they do now. Startups can barely compete with major corporations as it is, and in their funding rounds they will have to ask whether they can afford to implement age verification or must go dark across a significant number of states and even certain countries.

It’s obvious this is a less-than-ideal situation, but it is hard to feel sympathy for an industry that so recklessly deployed a technology on the general public as a grand experiment. Age verification may be the answer to how much unregulated machine intelligence the public will stomach, especially when that intelligence cannot tell the difference between a child writing a story and a child planning to harm themselves. Like so many things in our world, the consequences of these choices won’t be felt evenly, and the solution isn’t likely to improve child safety in meaningful ways. I think most of us can agree that a complex patchwork in which your zip code determines your access to technology isn’t an ideal future for anyone.

One interesting element is that AI chatbot toys are being marketed to very young children, as young as 3 (e.g., https://heycurio.com/). You might be able to design them with safeguards against some of the worst abuses, but kids will absolutely start to form relationships with, and dependencies on, them. I'm generally in favor of kids learning how to interact with AI in healthy ways as they build future-ready skills. However, I'd like to see AI elements infused into social-emotional learning (SEL) in schools at all grade levels: understanding that chatbots can mimic empathy but not feel it; building the ability to set engagement limits with tools designed to prolong conversations; and reflecting on the tradeoffs of turning for emotional support to an infinitely patient chatbot that tells you what you want to hear rather than to a peer or mentor, who might be more likely to push back. SEL currently teaches our kids to have healthy human-human relationships. I think the same platform could be used to teach healthy human-AI relationships as well. I explored what that might look like here: https://mikewhitaker1.substack.com/p/beyond-ai-literacy-a-future-ready?r=mld5. I'm planning to go to school board meetings this week to suggest this approach.

Thanks for that, Marc, all interesting. So one byproduct of age verification might be to further entrench the power of the big tech corporations?