In our era of AI acceleration, it's crucial to pause and consider these innovations' deeper implications for creativity. Ethan Mollick's assertion that "the AI you use today will be the worst AI in your lifetime" is a compelling hook that draws us into a world of endless possibilities with genAI, yet it raises an important question: use at what cost? Generative AI’s impact on society is not just about the environmental footprint or the economic ramifications but about something more profound and personal: the impact on our very way of creative thinking. As we integrate generative AI into our lives, we stand at a crossroads where we could gain and lose simultaneously, reshaping our cognitive landscape in ways we don’t fully understand.

The Beginner’s Mind

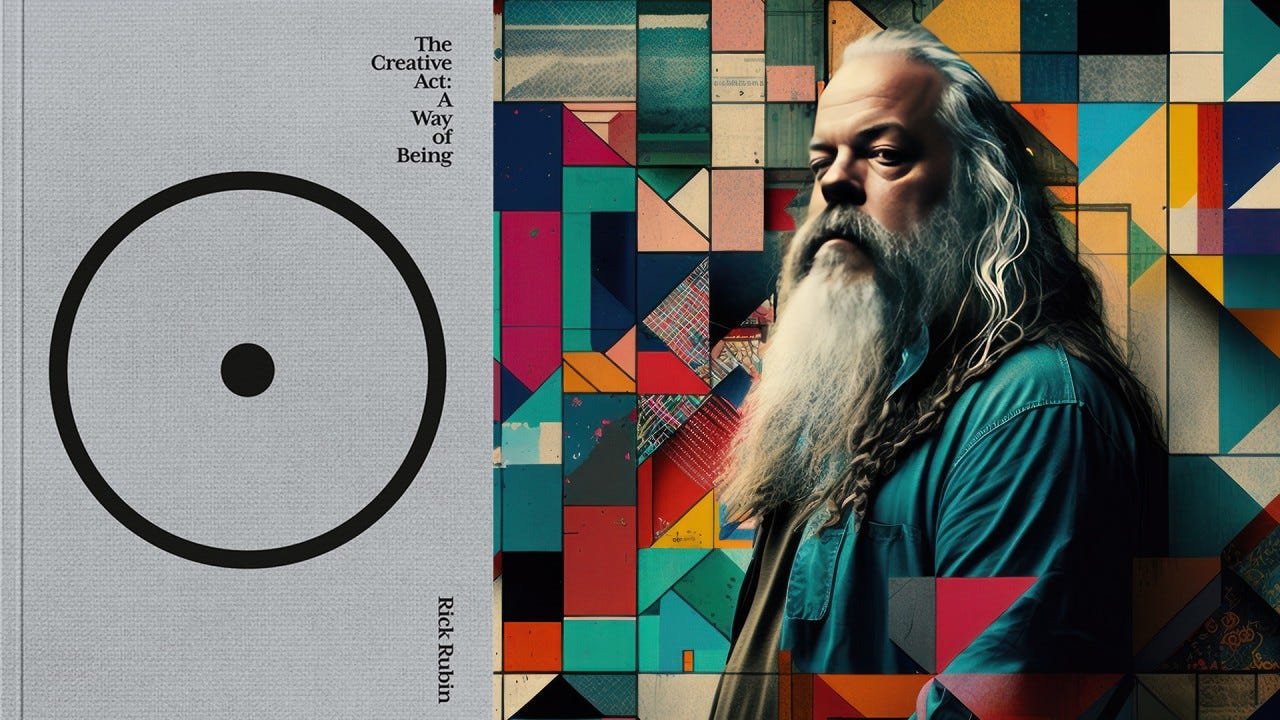

Consider Rick Rubin's insights in The Creative Act, one of the best books I’ve read dissecting the intricacies of artistic creativity. Rubin, with his Zen-like approach (amplified by his iconic beard), delves into the realm where art and AI intersect in Beginner’s Mind. This chapter offers the story of AlphaGo, an AI program, defeating a human grandmaster in Go—a moment that not only marked a technological triumph but also a profound shift in Rubin’s perception of creativity and possibility.

Upon first hearing this story, I found myself in tears, and confused by this sudden swell of emotion. After further reflection, I realized that the story spoke to the power of purity in the creative act. What was it that allowed a machine to devise a move no one steeped in the game had ever made in thousands of years of play? It wasn’t necessarily its intelligence. It was the fact that the machine learned the game from scratch, with no coach, no human intervention, no lessons based on an expert’s past experience. The AI followed the fixed rules, not the millennia of accepted cultural norms attached to them. It didn’t take into account the three-thousand-year-old traditions and conventions of Go. It didn’t accept the narrative of how to properly play this game. It wasn’t held back by limiting beliefs. And so this wasn’t just a landmark event in AI development. It was the first time Go had been played with the full spectrum of possibilities available. With a clean slate, AlphaGo was able to innovate, devise something completely new, and transform the game forever. If it had been taught to play by humans, it most likely wouldn’t have won the tournament. One Go expert commented, “After humanity spent thousands of years improving our tactics, computers tell us that humans are completely wrong . . . I would go as far as to say not a single human has touched the edge of the truth of Go.”

To see what no human has seen before, to know what no human has known before, to create as no human has created before, it may be necessary to see as if through eyes that have never seen, know through a mind that has never thought, create with hands that have never been trained. This is beginner’s mind—one of the most difficult states of being to dwell in for an artist, precisely because it involves letting go of what our experiences have taught us. Beginner’s mind is starting from a pure childlike place of not knowing. Living in the moment with as few fixed beliefs as possible. Seeing things for what they are as presented. Tuning in to what enlivens us in the moment instead of what we think will work. And making our decisions accordingly. Any preconceived ideas and accepted conventions limit what’s possible. We tend to believe that the more we know, the more clearly we can see the possibilities available. This is not the case. The impossible only becomes accessible when experience has not taught us limits. Did the computer win because it knew more than the grandmaster or because it knew less? There’s a great power in not knowing. When faced with a challenging task, we may tell ourselves it’s too difficult, it’s not worth the effort, it’s not the way things are done, it’s not likely to work, or it’s not likely to work for us. If we approach a task with ignorance, it can remove the barricade of knowledge blocking progress. Curiously, not being aware of a challenge may be just what we need to rise to it.

Rubin’s view of using AI as a tool to help humans reach creative breakthroughs is heartening. By having the vastness of possibility played out before us, pushing us to reconsider our preconceived notions of what we should do, this near-alien mechanical process might open humans to radical, innovative thinking that breaks existing paradigms. In this instance, AI’s fundamental weakness is flipped on its head. It matters that the machine learning system operates within a defined set of rules and lacks the ability to understand the cultural, historical, and philosophical contexts of the activities it engages in. Having such knowledge might actually be a hindrance in ways we don’t yet recognize.

Perhaps the closest generative tools that embody Rubin’s ethos around novelty are Google’s recently launched TextFX and MusicFX, designed with Lupe Fiasco and Dan Deacon. A user can prompt TextFX to explore language and have generative AI assist in showing unusual and incongruous pairings of words to form lyrics before testing them out in MusicFX’s generative beats. This is guided novelty using a blend of human actions to shape generative technology to support creativity.

However, the problem I have with Rubin’s overall approach to The Beginner’s Mind, as heartening, thoughtful, and utterly human as it may be, is that his focus remains on praising novelty for the sake of something unusual. Rubin spends a great deal of time critically reflecting on how rules and traditions hold us back creatively, but not much time is given to the potential issues that come from allowing something so new to influence your thinking.

Convergent Thinking

There’s a subtle danger to our creativity when exposed to new things, especially when we don’t pause and view them within the context of traditions. Arthur Cropley explored this in In Praise of Convergent Thinking, arguing, “in practical situations, divergent thinking without convergent thinking can cause a variety of problems including reckless change.” In other words, novelty without insight into existing practices isn’t all that helpful for creative thinking.

In Rubin’s mind, the novelty of losing to an AI could serve the creative act, forcing the Go grandmaster to reconsider his choices, his cultural influences, and his training, and to reach new heights, exploring the game of Go in ways he’d never foreseen. But that’s not what happened; the grandmaster retired from the game, forever. Being exposed to divergent thinking (shit, WTF move did this machine make) did not allow him to move past his shock into evaluating the move, which is what Cropley calls convergent thinking.

The story of the grandmaster who retired after his defeat to AlphaGo is a cautionary techno-tale. It shows that exposure to radical, AI-driven divergent thinking, without the balance of convergent reflection, can lead to unexpected, sometimes disheartening outcomes. It's a reminder that while AI can push the boundaries of what we think is possible, it also raises questions about the sustainability and depth of the innovations it drives. Let me also mention that Cropley and Rubin each embrace a tremendous amount of nuance in their approaches to creativity. Both ultimately argue that we need novel and convergent thinking to unlock our full creative potential.

Since ChatGPT’s launch, we’ve been awash in a whole sea of novelty, without much attention paid to evaluating what text generation might mean for creative thinking. The speed of its launch and the rapid bursts of text don’t lend themselves well to reflection. Already, our days are marked by frantic movements on stationary screens, exchanging emojis with digital strangers, and interactions increasingly mediated by algorithms we have little hope of ever understanding. Our digital lives are ones too vivid for thought.

Without a doubt, people are using ChatGPT and countless other generative systems creatively—that’s never been the argument. However, how much of that use moves beyond novelty for creativity? Few have advocated for anything but an all-or-nothing approach toward AI when it is clear that we desperately need balanced approaches that respect the wisdom in traditional methods while being open to new possibilities that can lead to more sustainable and meaningful advancements.

If You Build It, Maybe They Will Come

If generative AI is going to have an impact on society beyond disruption, human beings are going to need to incorporate it into their daily practices, habits, and rituals; otherwise, it will remain another hyped Silicon Valley marvel. Part of the acceleration we’ve seen from OpenAI and others involves saturating existing markets with generation via API. Seemingly overnight, most of our existing apps now have generative features built into them. But easy access doesn’t equate to adoption.

My existing practice as a writer was shaped over decades by tools and techniques that became habitual supports for my writing. I write in a word processing program, as much out of familiarity as habit, so when I was exposed to a chat-based interface that generates gobs of text, I was unable to see past the inherent limitations of the interface to what it could do to support my existing practice.

But, when I examine generative AI through the lens of convergent thinking, I’m better able to put AI into the context of historical traditions, and doing so helps me move past some of the limitations of the milquetoast chat interface. When I engage the technology in an app, such as Lex, a word processor with AI built into functions that augment, instead of replace, my writing, I’m much more inclined to adopt these features as part of my writing practice.

In this context, Jane Rosenzweig's approach to exploring generative AI in her writing course becomes particularly relevant. By framing the discussion around the question, "To what problem is ChatGPT the solution?" she invites a critical examination of the role and impact of AI in our creative endeavors. This perspective is essential if we are to harness AI's power responsibly, but I’m not sure there’s a way to integrate it into our lives so that it enriches rather than diminishes our human experience. We may find ourselves dividing into opposing philosophies over the same question.

I overwhelmingly see ChatGPT and generative AI as the demon that will eventually make human thinking irrelevant. This is also what Tyler Cowen expects.

Is this a good thing?