An Open Letter to Perplexity AI:

Absolutely Don't Do This

I won’t be using or purchasing Perplexity or its new AI browser. This isn’t a stance against browser-based AI or some statement against the technology as a whole. I won’t be using any product from a company that shamelessly markets itself directly to students by showing them how they can use AI to cheat and highlights that as a primary feature of its product. This is not acceptable.

That video came from Perplexity’s Facebook page advertising its new Comet browser. There are numerous others spread across different social media platforms. What troubles me most about this campaign is how callously Perplexity uses student-aged influencers to portray the most nihilistic version of how AI is unfolding in higher education.

The common script many of these ads work from goes something like this:

An influencer posing as a student claims their professor sent an email putting students on notice for using AI.

The student influencer notices that the professor used Comet to send the message.

We then get a rundown of Comet’s features and how popular it is.

The ad closes with the student influencer calling out the professor’s hypocrisy for using AI and promising to use AI to cheat or to get even.

I wrote about this sort of nightmare scenario in The Dangers of Using AI to Grade, but I did not expect to see this dark vision of uncritical AI adoption by teachers and students used as an overt marketing ploy to sell an AI app!

We’ve watched wrapper apps resell access to tools like ChatGPT through the API using similar marketing techniques, but this marks a new low. I’m sorry to see it because I’ve enjoyed using Perplexity more than many other AI tools. But this crosses the line. For the first time, one of the major AI developers has targeted students with a blatant appeal to use its AI to cheat. No, this isn’t the same as OpenAI giving away access to ChatGPT during finals last year, or Google offering students a year of free Gemini access. Perplexity is directly marketing to students by showing them how its AI browser can do their coursework for them.

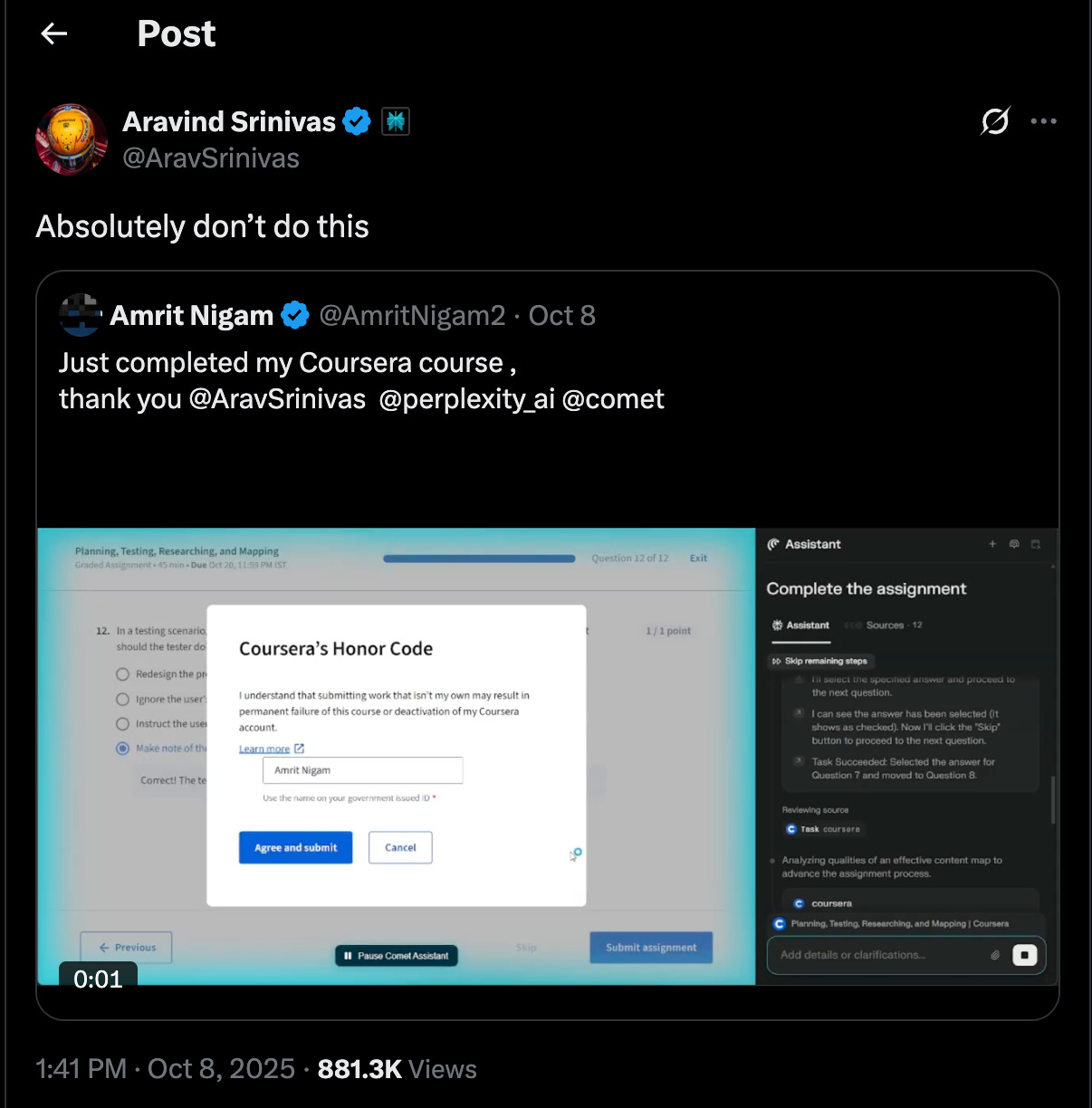

What’s even more shocking is that Perplexity’s CEO, Aravind Srinivas, doesn’t appear to be aware of the campaign, judging by his public posts chiding users for showing how Comet can be used to complete an entire course on Coursera.

This is bizarre. When the leader of a company decries the use of his product to commit academic misconduct but allows his comms team to build a marketing strategy that sells students that same technique to gain users, it erodes public trust across higher education, the very industry his company is trying to gain a foothold in. I’ll note again that many professors enjoy using Perplexity and view it as a valuable alternative to ChatGPT. All of that goodwill is being destroyed in real time.

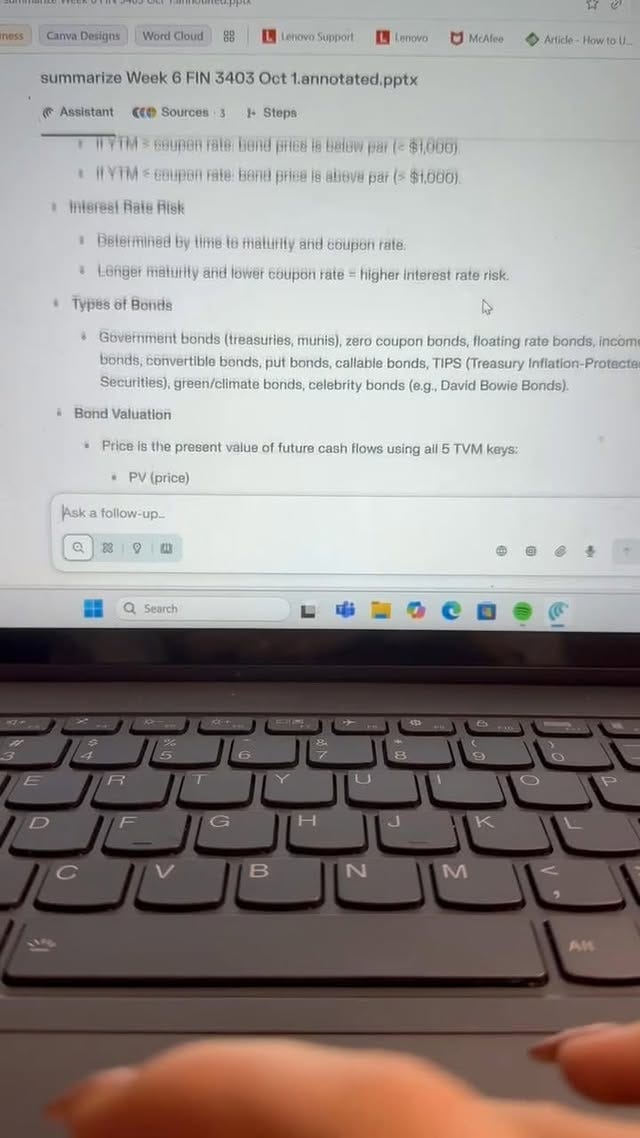

I’m not sure how effective Comet is at completing assignments, but others have tested it. Below, you can see

demo Perplexity’s Comet browser taking an assignment within his Canvas course shell, and it looks pretty impressive. With browser-based AI, students no longer have to copy and paste content from an LMS into an AI tool to get an instant answer. They simply open a site, and an AI agent automates the rest. That’s not a technique or tool that has a place in our classrooms.

What’s Behind Perplexity’s Marketing Push

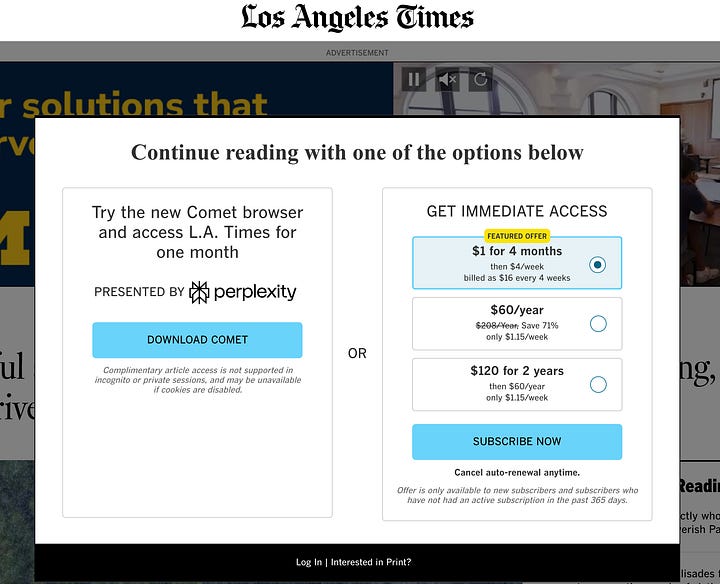

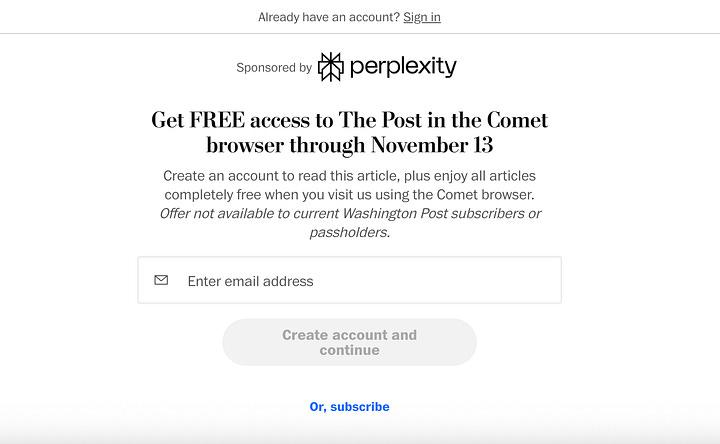

Students aren’t the only target of Perplexity’s advertising blitz. The company is spending millions of dollars on dozens of corporate partnerships to get users to adopt the Comet web browser. Go to the Washington Post or the LA Times and you’ll be greeted with a free trial offer to download Comet and get a month of free access to the newspaper. You’ll find the same with PayPal and Venmo; both online payment apps partnered with Perplexity to offer users free premium access to Comet. Do these companies know about Perplexity’s push to sell Comet to students?

Look, I get it. Perplexity’s multimillion-dollar ad campaign is its attempt to dethrone Google’s Chrome as the default web browser for most of the world. When you’re launching a product against a company synonymous with search, you don’t pull any punches. But that doesn’t excuse unethically targeting students with the promise that AI will let them not just avoid learning but actively commit academic fraud by having a tool complete a course or assignment for them. That’s not okay.

AI Developers Are Pushing Norms

Back in 2023, Perplexity made a provocative choice to release a language model called pplx-70b without any meaningful safeguards. I wrote a review at the time, Perplexity's New Uncensored Model, about how shocked I was to interact with a model that was completely uncensored in its outputs and made no moral judgments or imposed any limitations. Perplexity has since taken down pplx-70b, but that impulse to push the limits is far from confined to one AI company.

Instead, it is becoming a common trait among AI developers who don’t align their marketing with their supposed corporate values. One example is Sam Altman’s recent posts about pivoting ChatGPT from a safe, boring business tool to one that lets users generate erotica. That pivot arrives alongside the launch of Sora 2 as an app, which feels like an AI-generated clone of the worst parts of TikTok.

Accountability Matters

Quite a few people will say “so what” and claim developers are just saying the quiet part out loud as they shift from pursuing utopian visions of AGI for human flourishing to promising students even easier ways to cheat and letting users generate sexual fantasies. It’s an open question whether tech companies should have a public responsibility to shape what’s moral or ethical in society by limiting what their users can do with the technology; however, I find this really gross. I also think most educators would agree that we have a moral responsibility to use only technology that supports students in their learning. These developments won’t help with that.

No school or university should purchase Perplexity’s Comet web browser, and all of us should seriously consider cancelling existing subscriptions to send a clear message that this disgusting practice will not be tolerated, will not be rewarded, and will not be allowed to further drive a wedge between students and teachers. We need clear lines that have consequences if an AI developer crosses them, and for me, that means not paying or using their product.

We badly need to move beyond talk of AI slop, model collapse, or general criticisms that AI is bad at every task. Instead, we should be honest about when these tools work and are effective, while honing meaningful criticism of when they don’t, or when they cause negative and often lasting harm to our culture. Selling AI to students as a way to avoid learning must be one of those criticisms, and companies that engage in it should be put on notice that this practice isn’t acceptable and has consequences.

All of this was predictable as far as agentic browser AI goes, though what is so concerning is the recent trend of companies (not just Perplexity) leaning into the worst parts of AI insofar as they affect teachers, students, and schools. Even ChatGPT's "study mode," which I wrote about over the summer, seemed benign on the surface, perhaps even helpful, but it ignored the fact that the ultimate goal of these companies is to get a generation of users addicted to a tool they cannot live without. Of course they knew students would use these tools to complete their assigned work "without lifting a finger."

Claude's Skills, released just this week, are a powerful tool that lets users customize instructions and workflows to do impressive things like generate very solid presentations, Excel spreadsheets, and professional-looking documents that will only get more accurate; Claude is leaning into becoming the go-to model in the business community. Why wouldn't students be expected to take advantage of these tools too? The collision of corporate values and educational ones will continue to be on display, especially as these companies grow more desperate for revenue. As much as I understand your position, I'm skeptical that a boycott of Perplexity will have much impact.

Thanks, Marc. This is well-timed for a theme we'll be tackling in my class next week about how AI is changing higher education: What's so critical about critical AI. I especially appreciate you succinctly describing the form of the short video ads, what you call "the common script." I suspect my students will enjoy the critical analysis of the form along the lines you outline.

I agree that shaming and boycotting companies that can't (or won't) align their marketing with their "responsible AI" statements, while urging students to choose other options, is the right approach. In fact, I wish colleges and universities would coordinate more through governing bodies like Educause to call out the "move fast and break people" mentality that drives AI companies to prioritize growth at any cost.

The risk, of course, is that we end up sounding like the worst stereotype of the nineteenth-century schoolmarm as we wag our collective fingers, thus inadvertently strengthening the bad-boy appeal of AI outlaws.

As a strategic matter, I think your opening is what's most important about this post: we need to lead students to understand how Silicon Valley operates through media and culture to sell us harmful products and experiences, a lesson that can be applied throughout their experiences of consumer culture.