Google released its first public model to compete with ChatGPT, and the results are . . . underwhelming, but the implications are anything but. The stakes are extraordinary and far-reaching (no, I’m not talking about thinking machines!). If Google, Microsoft, and Amazon cannot align their generative AI models with existing productivity tools, aka ‘talking to your data,’ then we may have front-row seats to one of the largest tech meltdowns since the dot-com bubble more than two decades ago. That doesn’t just have implications for tech companies; the ripple effect from the fallout could cause massive disruption to the economy.

Using AI to talk to your documents, the holy grail of workplace use cases for LLMs, depends on Retrieval Augmented Generation (RAG). It is the moonshot Google and Microsoft have hitched their wagons to with LLMs. Google is poised to gain or lose the most from this current generative AI cycle, and so far, it has managed to stay one step behind in LLM capabilities while being by far the most forward-thinking in interface design. If Google successfully upgrades its underlying systems with Gemini and somehow figures out its issues with RAG, then it could become the leader in the genAI space. But if it cannot overcome the technical hurdles in its current system, if users can’t talk to their documents, then Google Labs’ AI experiment will go down as one of the most expensive and reactionary tech disasters in recent history.
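To make the idea concrete, here is a minimal sketch of the RAG loop in Python: score stored passages against a question, keep the best match, and paste it into the prompt the model will see. It assumes a toy word-overlap retriever (cosine similarity over word counts) in place of the embedding models and vector databases real systems use; the function names and sample documents are hypothetical, and this is not how Google or Microsoft actually build their products. The final call to a language model is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Retrieval step: rank documents by similarity to the query, keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Augmentation step: place the retrieved passages in front of the question.
    # (The generation step, sending this to an LLM, is not shown.)
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical "your data": three snippets from a user's files.
docs = [
    "The Q3 budget memo caps travel spending at 5000 dollars per team.",
    "Our style guide requires sentence case headings.",
    "Conference hotel booking confirmed for March 4.",
]
print(build_prompt("What is the travel spending cap in the budget?", docs, k=1))
```

Even in this toy version, the model’s answer can only be as good as the passage the retriever picks.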

Google Calls a Code Red

You might not know (or care), but Google invented the transformer architecture behind today’s LLMs. The problem at the time was that Google didn’t see much use for it, so it published a research paper announcing the invention and open-sourced the technology. This was back in 2017. The following year, a then little-known start-up used the underlying technology to build a language model it dubbed a generative pre-trained transformer: OpenAI’s first GPT. It’s wild to think all of this happened only about six years ago! Google’s panic after OpenAI launched ChatGPT a year ago has been nothing short of reactionary. It has been trying to play catch-up ever since, with some lackluster results. But Google has a leg up on nearly everyone in the LLM game: search. We’ve come to see ‘googling’ something as synonymous with searching the internet. And search isn’t the only leverage Google has; it owns everything from YouTube to cell phones and controls a significant chunk of cloud computing. Let’s take a stroll through Google’s current genAI features.

Google Labs

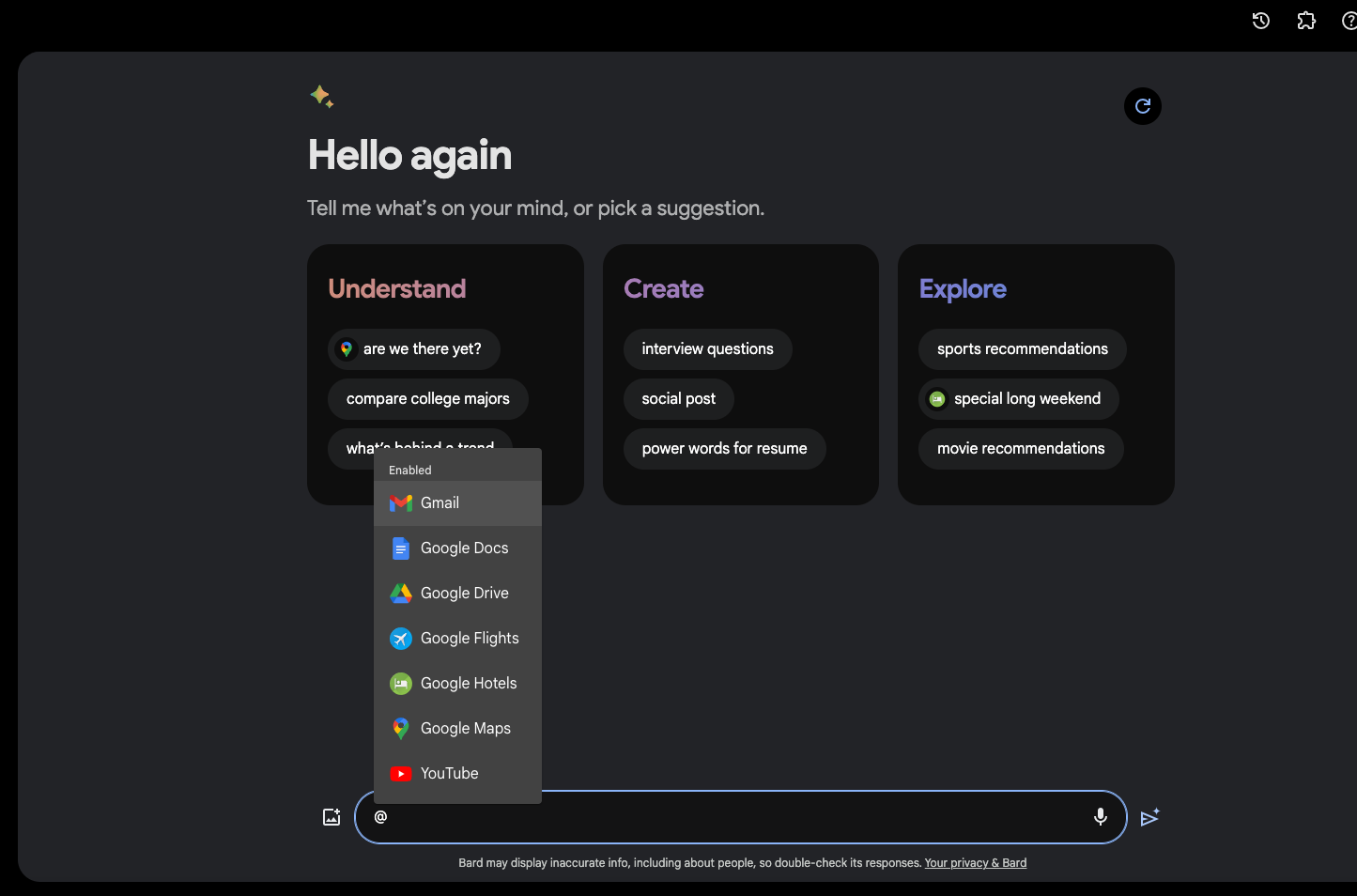

You can now access a tier of Google’s new Gemini model via Bard, but it’s not quite as powerful as GPT-4. All of the features in Google’s genAI experiment can be activated via Google Labs, and there are a lot of them. For this article, I’ve activated Google Search to show you how Google’s Search Generative Experience affects internet search, and Google Workspace to show its genAI features in the G Suite. I’ll also show you how Google recently upgraded Bard, its competitor to ChatGPT, to interact with your data. Be aware that you may want to use a secondary Google account, as activating this will expose all of the data in your Google Drive to genAI.

Search Generative Experience

SGE is the acronym Google uses for its Search Generative Experience feature. Each time you search, you can optionally generate a summarized overview with linked sources, ask follow-up questions, or engage with pre-generated prompts to help you refine your topic. It works fairly seamlessly and is pretty intuitive. Unfortunately, most of the sources it surfaces are low quality relative to my search. Occasionally, SGE will give me mainstream sources, Wikipedia, or scholarly databases, but most of the generated stubs are from clickbait sources.

Google Workspace

Most of the features rolling out across Google Workspace, Google’s G Suite of tools, are fairly milquetoast compared to what we’ve seen demoed from Microsoft or from third-party applications using OpenAI’s API; however, there is a major catch here: Google is connecting your data to its platforms using genAI. That’s something we haven’t seen outside of Microsoft’s limited demos for Copilot. Let’s start with the G Suite.

Sheets

Sheets offers a tepid demo of how users can generate pre-filled rows and data. It isn’t hooked up to your data yet, so for now it generates fairly random material.

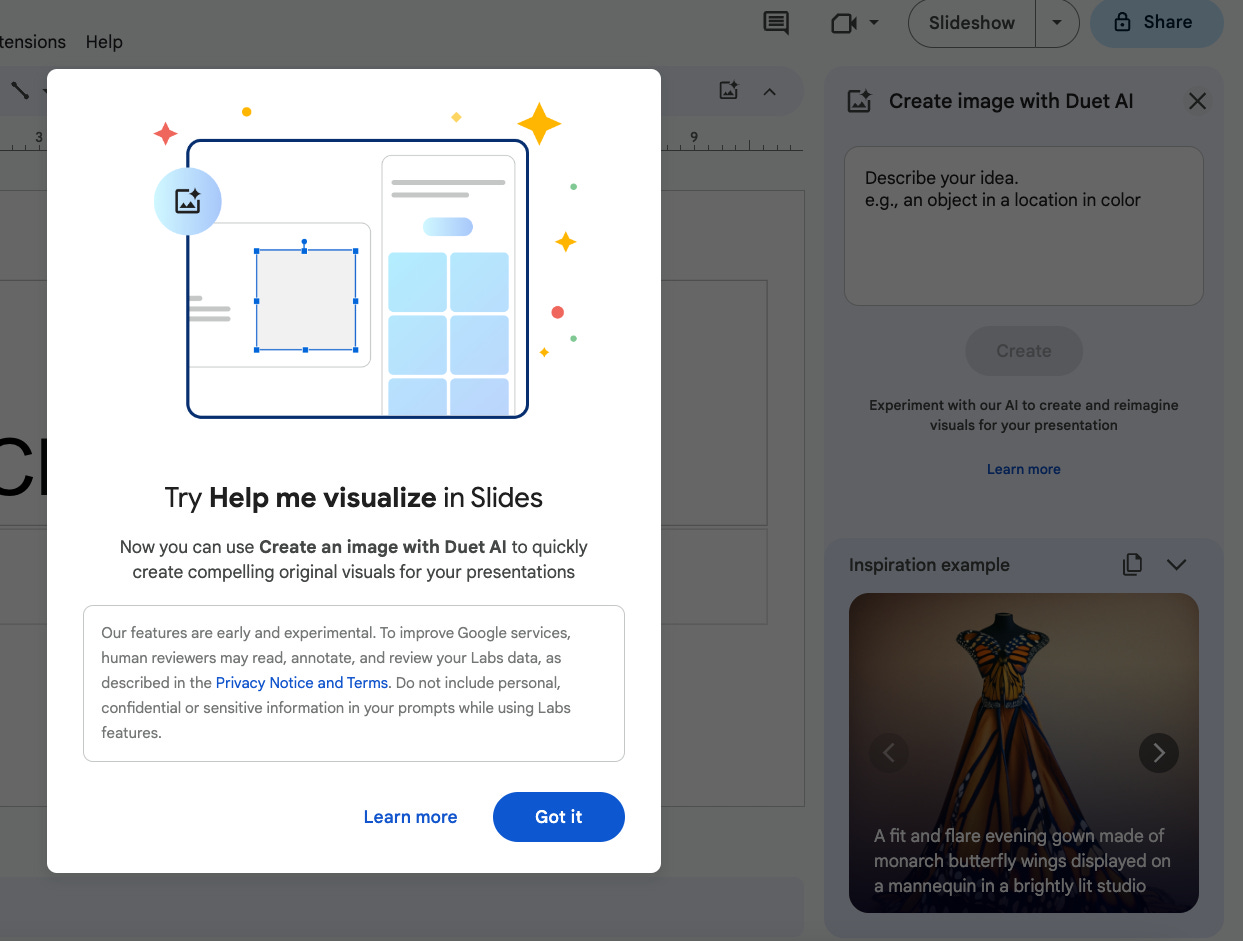

Slides

The ah-ha moment many reported with Microsoft’s Copilot, selecting a document and using natural language to transform its content into a PowerPoint presentation, is sadly absent from Slides. What users get instead is an image generator that is pretty underpowered compared to DALL-E 3 or Stable Diffusion.

Docs

The genAI features built directly into Google Docs are pretty irritating from a teaching and learning standpoint, as the only thing that announces them is a magic wand with the phrase “Help Me Write.” You can generate some unique templates, but they aren’t all that interesting. As I mentioned earlier, since Google is using an older, underpowered LLM, it is less capable of generating material than OpenAI’s GPT or Microsoft’s Bing.

Where Things Get Interesting: Bard Integrated With Search

The Workspace features are ho-hum to me, mostly because RAG isn’t working out the way developers envisioned, but Bard’s recent update to Gemini allows you to interact with your data directly. The caveat, of course, is that the LLM is underpowered and hallucinates a lot compared to GPT-4, so its use cases are currently very limited. Below are a few examples with YouTube and Hotels.

Limited as it may be now, I think the implications are pretty profound if and when Google improves it. Virtually all the data I’ve collected from my pilots using LLMs with my students indicates that adoption of genAI depends on how intuitively the interface supports a user with a particular task or augments an existing skill. As a tool, this can be immensely useful, but only if it works!

Most recently, Anthropic announced a simple prompting technique that allowed its model, Claude 2.1, to correctly retrieve a single sentence buried in nearly 500 pages of text:

We achieved significantly better results on the same evaluation by adding the sentence “Here is the most relevant sentence in the context:” to the start of Claude’s response. This was enough to raise Claude 2.1’s score from 27% to 98% on the original evaluation.
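The trick in the quote is usually called prefilling: instead of changing the question, you write the first words of the model’s answer and let it continue from there. Below is a minimal sketch of how such a long-document test could be assembled, assuming a chat-style list of role/content messages in which a final assistant message acts as the prefill (the layout Anthropic’s Messages API uses). The filler text, the “needle” sentence, and the helper names are hypothetical; the sketch only builds the request, makes no API call, and does not reproduce Anthropic’s actual evaluation.

```python
# Hypothetical sketch: assemble a "needle in a haystack" request with and
# without the response prefill quoted above. No API call is made here.

PREFILL = "Here is the most relevant sentence in the context:"


def build_haystack(filler: str, needle: str, copies: int, position: float) -> str:
    # Repeat filler text to simulate a very long document, then bury the
    # needle sentence at a relative position (0.0 = start, 1.0 = end).
    chunks = [filler] * copies
    chunks.insert(int(position * copies), needle)
    return " ".join(chunks)


def build_messages(document: str, question: str, prefill: bool) -> list[dict]:
    # One user turn carrying the document and the question. With prefill=True,
    # a partial assistant turn follows, and the model continues from it.
    messages = [
        {"role": "user", "content": f"<document>\n{document}\n</document>\n\n{question}"}
    ]
    if prefill:
        messages.append({"role": "assistant", "content": PREFILL})
    return messages


# Hypothetical test case: a made-up fact hidden halfway through filler text.
haystack = build_haystack(
    "Filler paragraph about nothing in particular.",
    "The quarterly report was filed on a rainy Tuesday.",
    copies=2000,
    position=0.5,
)
question = "On what kind of day was the quarterly report filed?"
without_prefill = build_messages(haystack, question, prefill=False)
with_prefill = build_messages(haystack, question, prefill=True)
print(with_prefill[-1])
```

Sending `without_prefill` and `with_prefill` to the same model is, in spirit, the before-and-after comparison the quote reports: the question and document are identical, and only the opening words of the answer differ.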

A single sentence added to the start of the model’s response was all the system needed. That’s astounding and extremely troubling, because it shows us how little even the developers and engineers building these systems know about them. It also adds to the mystery and hype surrounding the underlying technology.

Such simple solutions belie greater problems. Human beings want autonomous systems to recall memories and information lost to them and to serve their needs on tasks that are intrinsically personal and localized, often blurring the line between agent and tool. This desire to anthropomorphize an algorithm fuels so much of genAI adoption that we haven’t paused to ask ourselves whether we’ll be satisfied with a workplace productivity tool or whether what we really want is a second brain: one that isn’t quite human or conscious enough to be a threat, but is still alluring enough for us to invest in emotionally as something more than a mere program.

A TV Recommendation

As a bit of an aside, I have a TV recommendation for you: Scavengers Reign on MAX. This show is a trip. It takes the stranded-on-an-alien-world sci-fi premise and makes it delightfully weird. The best comparison I can make is that the series feels like a cross between Solaris and Annihilation. The characters are all well developed and the animation is crisp. This isn’t something to binge; it’s more of a one-episode-per-day experience that gives you time to process what you just witnessed.

Hey, Marc,

Let's say I am using an LLM to find good quotes to insert into an existing draft I am writing.

What would be your suggested pathway for the best results?

Asking for a friend!

This is a really great summary, thanks for diving into this.