Higher Education Needs Frameworks for How Faculty Use AI

The anticipation surrounding GPT-5 created a dizzying buzz among tech enthusiasts who were obsessed with the idea that artificial general intelligence (AGI) was imminent. OpenAI’s bungled launch of GPT-5, combined with the less-than-stellar performance of this new series of models, deflated much of the hype around AGI. GPT-5 is a good model with some marginal improvements, but it is nowhere near actual intelligence.

The challenge for much of society is that you don’t need AGI to create chaos in our world; existing AI systems are already doing that. The erosion of trust that comes when a person or company uses an AI tool to mimic a human response will be felt once more in classrooms across the country this fall, and many people are already experiencing it throughout public life. Stories of police officers using ChatGPT to write crime reports, lawyers using AI to argue civil and criminal cases, judges using it to decide motions, pharmacists using AI to fill prescriptions, doctors using chatbots to diagnose patients, and even real estate agents using AI to list homes and draft contracts create mistrust on a scale few could have imagined just a short while ago.

I cannot speak for those other fields, but I do think educators must ask what our ethical obligation is if we use this technology in the disciplines we teach. Unfortunately, I don’t think we have any sort of consensus on the matter.

The Freedom to Use, Ban, or Simply Ignore AI

Academic freedom is one of the cornerstones of higher education. Faculty decide how to teach their courses, which topics are appropriate, and which tools to use to facilitate student learning. But what does that freedom mean in an era of algorithmic learning, when every faculty member gets to set the AI policy for the courses they teach? More pointedly, what happens when some faculty decide AI is a valid tool for communicating with students, offering them feedback, or even grading them, while their colleagues disagree?

I know we’re rightly concerned about how much our students are turning to AI to augment or replace learning in our classrooms, but higher education has only begun discussing how faculty should create ethical guidelines for their own use of AI with students, and how AI should be treated in research, promotion, and institutional advancement. There is no consensus among faculty about where to draw the line on faculty use of AI. Some find the very notion of a teacher using AI on students reprehensible, while others see it as just another tool to help students reach their full potential.

For $20 a month, I could use ChatGPT or an AI agent to automate the hours I spend each week responding to student work with written feedback. Many will cry out that the feedback is soulless, robotic, error-prone, and overly sycophantic. And I would agree with them. However, it is still feedback, and the quantity and thoroughness of it often surpass what I’m able to give a student.

The same goes for the personal communication we have with students via email and the requests we receive for letters of recommendation. Why don’t we automate those as well? For me, the reason I won’t automate those tasks boils down to the fact that I value my relationships with flesh-and-blood humans and don’t want to cede them to a machine. Last week I wrote about the AI policy for my Writing 381 course, AI and Writing, as an example of trying to communicate this clearly to students:

For our class AI policy, I’m going to start by telling you what I won’t be using AI for and why that is meaningful to me. I won’t be using AI to answer emails, provide feedback, or grade your work. I also won’t be using AI to write letters of recommendation. The reason why I won’t be using AI for these purposes is that it can impact the relationship I have with you, and that is something I value much more than efficiency. You may feel differently, and that’s okay. Any time that I do use AI, I will be transparent about how it is used, including labelling what was generated by a machine. I invite you to craft your own statement about how you will use AI or not use it in this class, focused on what you value about your student experience, learning, and the relationship you have with your peers and me. While your own stance can take many forms and change during the course of the semester, one area I will ask you to respect is openly disclosing when you use AI with me and one another.

But those are my values, not necessarily values shared by my colleagues teaching in other disciplines or even in my own department. What happens when they disagree and decide that AI is perfectly effective for feedback, apt at writing concise emails, or any number of things that impact the time we spend with students and their work?

AI Feedback Goes Mainstream

AI feedback might sound robotic and hollow to some, but it also goes into far more depth than I’m able to give a student. We’re seeing large-scale implementations of nuanced approaches to AI feedback across California through the Peer & AI Review + Reflection (PAIRR) initiative. PAIRR combines AI and human feedback to support students during the writing process and asks them to reflect critically on that process and on what it added to their learning. It’s a technique I piloted in my writing sections in 2023 using Eric Kean’s Myessayfeedback tool. When used thoughtfully, I think it truly helped students.

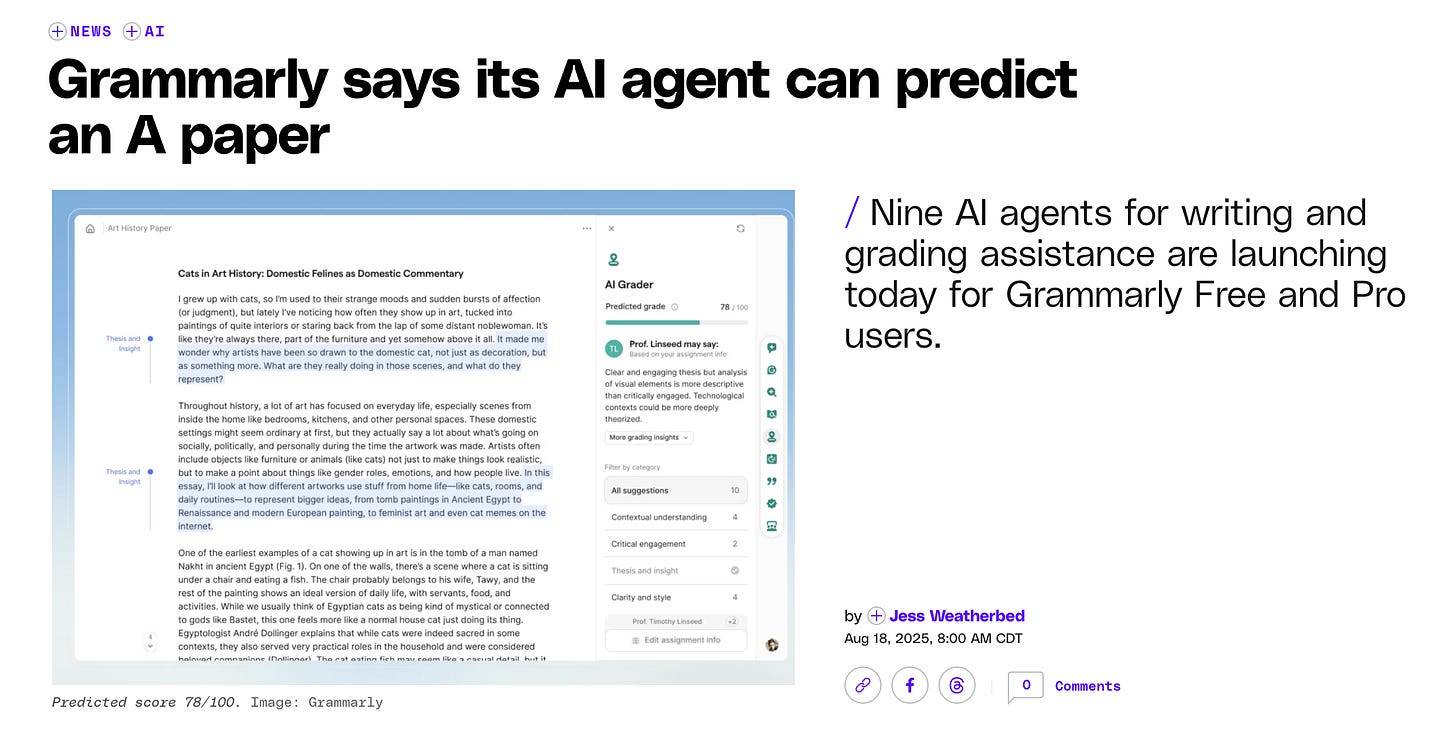

Grammarly is also getting into the AI feedback business, using customized agents to give students feedback on assignments before they submit them. An agent within Grammarly’s system could offer targeted feedback tied to a rubric or feedback based on a persona. It could even search the web for information about the professor and have the internal AI predict how that professor will grade.

It isn’t surprising to see this approach being lambasted on social media. Many professors see this use of AI as a threat to their teaching and as reinforcing transactional views of education. Whether that is what these features actually do, or whether the problem is poor framing, isn’t clear at this point.

I think there are increasing questions about equity and fairness beyond just cheating with AI. It’s pretty clear that students who use AI feedback may have certain advantages over their peers who do not. They can revise faster, get more feedback, revise again, and finish long before a human being is able to give them any meaningful feedback. Meaningful is a word I think most of us will focus on, but what a student finds meaningful in terms of feedback is often tied to a grade, while a faculty member might want to focus on other areas, like personal growth.

AI Usage Is Ultimately a Labor Issue

When some of your colleagues use tools to automate their labor, saving themselves time and claiming it makes them more efficient, it creates an uneven playing field that makes adopting the technology crucial simply to keep up. That’s not hyping AI; it is putting automation into historical context alongside labor and market forces. Those same forces show us that few jobs or tasks, once automated, return to their previous state.

Imagine working alongside a colleague who starts automating their classes when you do not, and the impact this has on their day-to-day life. We’re already seeing some K-12 teachers turn to AI to save themselves time, and some report saving six hours a week. How exactly is that going to play out in departments across campuses when some faculty automate their workloads while others do not?

Non-tenure-track faculty such as myself often pick up extra sections to help pay the bills and to serve the department by taking on overloads. How are overloads going to work in a world where automation plays an increasing part? Would the chair of a writing department assign an extra section to a faculty member who doesn’t use AI, knowing that students will likely get less feedback and wait longer to receive it, or to a faculty member who will use AI to give students feedback and perhaps receive better student evaluation scores as a result?

The Lack of Institutional Guidance About Faculty Use of AI Must Change

Some institutions are attempting to put guardrails around faculty uploading student work to an arbitrary LLM or AI detector in ways that might violate students’ FERPA rights, but many of these policies don’t acknowledge that most institutions now have access to at least one, if not several, data-protected AI models. A few forward-thinking campuses understand that faculty are using AI to give feedback and grade, and they stop short of banning the practice. Instead, most of these guidelines call for the faculty member to confirm any grade an AI system might give a student.

The problem with these guidelines is that they stop short of giving anyone a definitive answer. I know each discipline must deal with AI in its own way, but there should be clear frameworks for when to use AI and when to pull back. Mark A. Bassett’s SECURE AI usage framework for staff is an excellent start, and I highly recommend taking a look at it. Inspired by it, I took a stab at an early draft of a similar framework for faculty here in the US to consider. My hope is to turn this framework into a simple decision tree that faculty can consult and internalize when making choices about their use of AI in education.

VALUES Framework for Faculty Use of AI in Education

V-Validate

How can you validate student learning when AI was used?

Are faculty able to use AI reliably in a way that supports student learning?

Is the faculty member’s use of AI transparent and easy to explain to students?

How is AI being used to support student learning outcomes?

A-Assessment

Using generative AI to assess student learning through direct grading, feedback, or tutoring should be done in a manner that ensures fairness.

Is the use of AI to grade students, automate coursework, or communicate with students done with faculty supervision?

Is there a method for students to audit or challenge a grade assigned by the tool or service the faculty member uses?

Is the use of AI in assessment fair and free of bias that could cause a student harm?

L-Labor

One of the main use cases for generative AI is efficiency and task completion. However, faculty should not use AI solely to save time, and they should be wary of the potential consequences that automation could have for their own work.

Is the faculty member using AI to save time, to increase efficiency, or because they believe it genuinely improves student outcomes?

Could automation create conditions for institutions to increase faculty workloads, raise the number of students in courses, or justify job cuts?

Does a faculty member’s refusal to engage with AI create additional work, and if so, what institutional support will be offered?

U-Usage

Faculty usage of AI with students should be narrow in scope and consider the impact automating certain aspects of teaching will have on the student experience.

Will using AI in this instance alter the authenticity of faculty relationships with students or colleagues?

What skills or knowledge might atrophy if faculty automate a task for their teaching or student learning?

What information will students need to know to use AI responsibly in each discipline?

E-Ethics

Faculty usage of AI should model open disclosure with students, set clear guidelines for students who would like to opt out, and avoid deceptive assessment practices.

Will the faculty member’s use of AI be openly disclosed to students in a manner that is accessible and easy to understand?

What options would a student have if they asked to opt out of an assignment that required them to use generative AI?

Does the institution have procedural frameworks for using AI detection that are fair to students?

S-Secure

Faculty should use AI systems that secure user data in accordance with federal and state guidelines.

What data protection is in place when a faculty member requires students to use AI or loads student work into an AI system?

Does the AI system protect personally identifiable information in accordance with FERPA and HIPAA?

Did the faculty member obtain permission from the copyright holder before entering original works or creations protected by copyright, trademark, or patent law into an AI system?

The VALUES framework is just an idea at this point. There are parts of it I don’t like, and I worry that I am being too biased, focusing more on harms than on the potential gains from using AI. That’s why I’d value some human feedback on it. We need to start establishing agreement and norms around using a technology marketed as having human-like intelligence with vulnerable populations like our students, and I don’t really see that happening in broader society.
