I know many of us are returning to the classroom with a sense of unease, even dread, about how generative AI will impact the semester. There are dozens of advice pieces about AI in the classroom that drop each day. Instead of adding to the noise with this post, I want to focus on one reason why we have to teach students about this technology and step outside of the AI-as-academic-misconduct discourse.

This fall, we’re going to be awash with AI-generated election disinformation and misinformation. While deep fakes are nothing new, AI adds a new dimension to them. What follows is a short rundown of some of the things current language and multimodal models can do and why we need to double down on teaching ourselves and our students digital media literacy skills in this new AI era. You can jump to the end of the article to find a number of helpful games you can play with your students that can be used to develop their AI and information literacy.

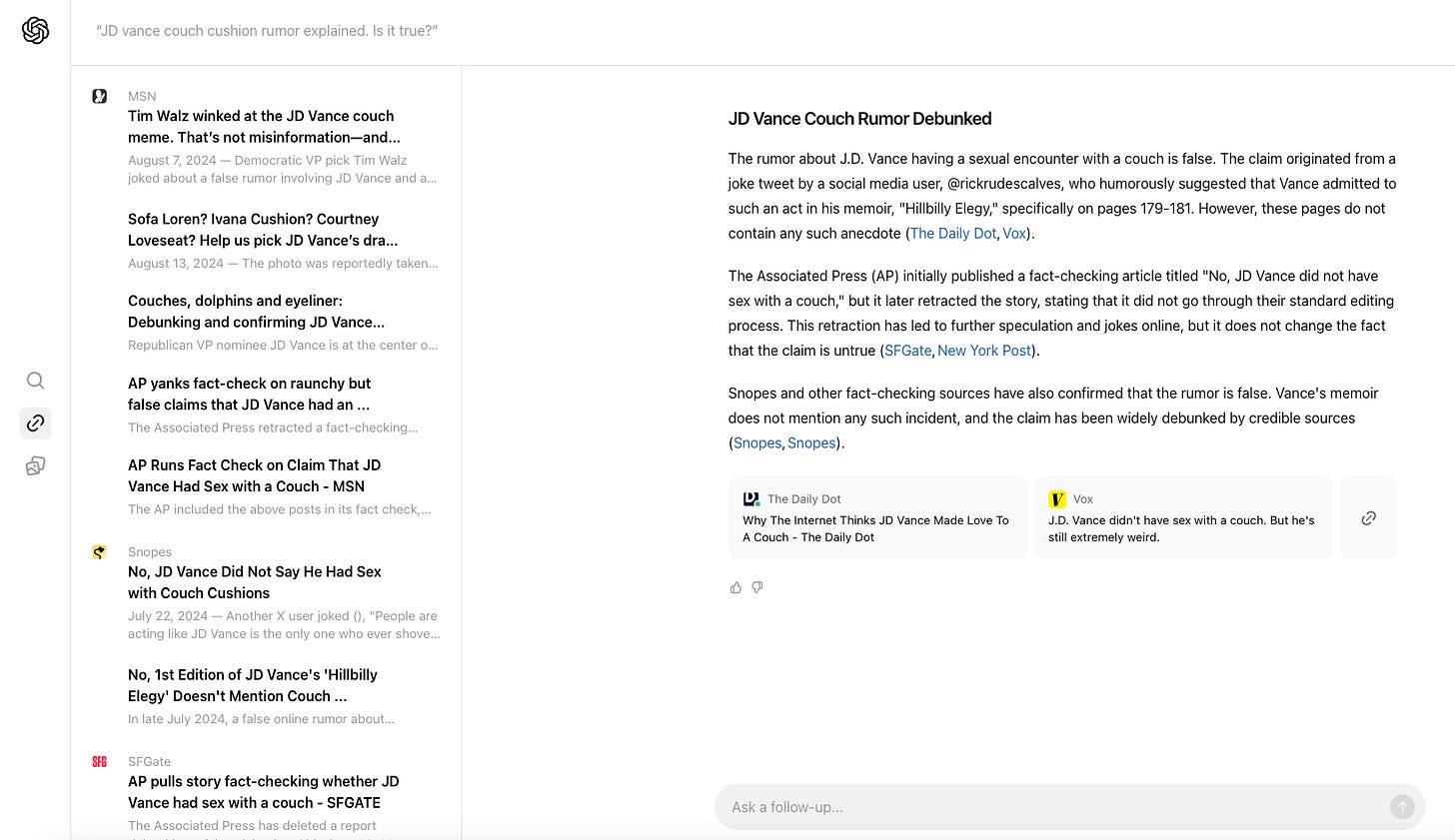

Using AI to Debunk Fake News

Let’s start with some low-hanging fruit by asking SearchGPT about the JD Vance couch cushion rumor that went viral on X. Yes, you can use various AI research engines to debunk rumors. I previously wrote about SearchGPT and its limitations in its beta release, but hallucinations are not one of them.

The sources SearchGPT pulled are all accurate and to the point. Students could use AI like this as a powerful tool to help them with parallel reading strategies and vet sources. The catch is, a tool like SearchGPT is powered by AI and licensing agreements with Conde Nast and other publishers. OpenAI isn’t going to allow their proprietary model access to low-quality sources or sources that might damage the trust they’re trying to establish with users. That curation limits how useful parallel reading is as a credibility check. If the sources are by default legitimate, then what’s gained by analyzing them may be limited to certain biases. But that’s not the case with other models. Perplexity uses a much broader range of sources in its search and many of these would not be considered credible for academic arguments.

Using AI to Break Bad

Keep in mind, the technology powering ChatGPT isn’t limited to OpenAI or other major companies. Dozens of open models exist that let users and developers program them for anything. This allows users to knowingly create misinformation at a scale we’ve never imagined.

Palisade Research offers the following example of how easily AI can be used to silo our realities, with their FoxVox extension for Google Chrome. Using FoxVox, anyone can rewrite a webpage directly from their internet browser and share screenshots across social media.

Palisade Research launched FoxVox to raise awareness of generative AI’s misuse. It is the latest in a series of projects that aim to show the general public just how bad things can get when anyone can use generative AI to distort reality and erode public trust. Sadly, these projects have not gotten enough attention.

One such example from last year was CounterCloud. Here, a researcher showed how easy it is to automate disinformation using generative AI.

CounterCloud allows a user to create news sites full of fake and distorted information for a few thousand dollars a month. Mind you, this was last year using GPT-4. Now that OpenAI has launched GPT-4o and cut costs of generative outputs, that number is likely far lower.

Using AI to Create Deep Fakes & Misinformation

The JD Vance couch cushion sex story is absurd, but its viral popularity shows how powerful narratives can be in shaping the nature of our increasingly negative public discourse. X is now filled with generated videos and images of Kamala Harris and Donald Trump. Most of these are incredibly disturbing.

It is shockingly easy to use AI to pile on the political firestorm. In the image below I used Flux’s AI model to create a JD Vance supporter draped in an American flag sitting on top of a pile of well-worn couch cushions, giving the camera a knowing smile.

But that’s not all. I don’t have to settle for images when I can use generative AI to create a video of my deep fake rumor to make it a more attractive meme and increase its chances of going viral. For that, I uploaded the image I generated in Flux to HeyGen Lab’s Expressive Photo Editor tool and used the viral TikTok song “I’m Looking for a Man in Finance.”

Teaching Students How To Navigate The Dark Forest

We laugh. We cringe. How often do we reflect on what it means? I did all of the above for free in a matter of moments. Generating material with multimodal AI is so fast that users may not have time to pause or consider the downstream consequences of their actions. This is why it matters so much to talk to our students about the impact of their actions and their responsibility as consumers and potential users of generative tools in public spaces.

We want our students to be able to identify when information is credible and explore biases, but social media doesn’t afford users the time needed to make any sort of critical judgment beyond a thumb swipe or smashing a like button. The speed at which we consume content via social media means we’re often living a digital life too vivid for thought. Let’s spend some time this fall challenging that.

Using Games to Help Students Spot Misinformation and Deep Fakes

Last fall, I created an assignment to explore misinformation and disinformation using the Bad News game. The game was developed by the Cambridge Social Decision-Making Lab at Cambridge University and puts the user in the role of a social media monger, whose goal is to gain as many followers as possible by spreading conspiracy theories and false information. Along the way, the game shows students how algorithms that power social media reward their choices. The assignment I put together gives students a chance to process and reflect on how our choices impact the media landscape.

Bad News is about our socials before AI came on the scene, but it remains a powerful activity to help students. Luckily, there are a number of other games out there that can help students understand the nature of AI deep fakes and learn to spot them:

Simple: Calling BS’s Which Face is Real?

A straightforward game that asks a user to guess if a face is real or generated by AI. It does a good job of introducing deep fake images using an older type of generative technology.

Scored: Microsoft’s Real or Not

A 15-question image guessing game that goes beyond shapes, using a series of sophisticated real and AI images that pose a challenge for a user. Unlike Which Face is Real, this game is scored and lets you know how you fared against others.

Timed: Google’s Odd One Out

A really challenging game that is timed to mirror the short attention a user gives an image they run across on social media. Not only is the ticking clock stressful, but a user also has only a limited number of guesses before they lose.

Using games can help young learners navigate a complex topic like AI-generated disinformation and deep fakes. But to teach students about generative AI’s impact, we have to first move beyond AI as cheating and ask young learners to analyze it as a tool, a process, one in desperate need of serious inquiry. If we don’t find more moments where we can slow things down and allow students the time to critically explore the rhetorical messages behind the information that slides effortlessly across their screens each day, then we’re missing an opportune chance for them to learn.
