OpenAI’s Education Forum was eye-opening for a number of reasons, but the one that stood out the most was Leah Belsky acknowledging what many of us in education had known for nearly two years: the majority of the active weekly users of ChatGPT are students. OpenAI has internal analytics that track upticks in usage during the fall and drop-offs in the spring. Later that evening, OpenAI’s new CFO, Sarah Friar, further drove the point home with an anecdote about usage in the Philippines jumping nearly 90% at the start of the school year.

I had hoped to gain greater insight into OpenAI’s business model and how it related to education, but the Forum left me with more questions than answers. What app has the majority of users active 8 to 9 months out of the year and dormant for the holidays and summer breaks? What business model gives away free access and only converts 1 out of every 20-25 users to paid users? These were the initial questions that I hoped the Forum would address. But those questions, along with some deeper and arguably more critical ones, were skimmed over to drive home the main message of the Forum: universities have to rapidly adopt AI and become AI-enabled institutions.

The Equity Issue

During the Forum, Harvard’s Mitchell Weiss talked about rushing to purchase ChatGPT Plus plans for all Harvard Business students. He wanted the playing field to be technologically even so that each student had the same level of access to ChatGPT. Now that OpenAI has released GPT-4o for all users and a more niche o1 for paid users, I’m not sure why institutions need to pay for what equates to more time using an otherwise free tool. While ChatGPT remains the dominant interface for people to interact with generative AI, it isn’t like Sauron’s one ring. Far from it. There are models on the market that perform certain tasks better than ChatGPT.

On the surface, purchasing enterprise-level access to a tool makes sense from an equity standpoint, but access doesn’t always equal equity when only a few have the attention to keep up with generative AI. With so many models and use cases, only the most advanced users can keep pace with the latest updates, and they tend to have to pay quite a bit, spread across a number of apps, to do so. Institutions aren’t going to adopt that model. Do we really think faculty or students will?

Most likely, we’ll see generative tools shift from enterprise into department and class-level purchasing decisions. I don’t think either is a good thing in the long run because the cost of purchasing these tools will be passed directly to students. AI will become the next textbook-level purchase in education. It is extremely unfortunate for students to bear the burden of the cost, but there's no one tool on the market that covers all of the use cases for generative AI. I also don’t see institutions taking any sort of coherent stance on limiting AI. That will remain the prerogative of the faculty member to assign or ban. Those of us hoping for a lull in the chaos of AI adoption or guidance navigating bans aren’t going to catch a break.

The Pedagogy Issue

How students use this technology is complicated, and while the Forum did an excellent job bringing together a broad swath of academic voices, there weren’t many critical takes. Ethan Mollick was the most balanced in his approach. He called for OpenAI to work more closely with educators to create approaches that help students learn, instead of using AI as a crutch. He noted that students who are heavy AI users raise their hands less in class and ask fewer questions, which is a bad thing.

It takes time to mold AI into a copilot rather than simply using it to complete tasks for you. One of Mollick’s ideas is to create open-source prompts that force ChatGPT into being a copilot to help tutor students. Why, though, is Mollick doing this and not OpenAI? There isn’t any reason they cannot have a toggle within ChatGPT called “education” that a student could simply switch on, with meta prompts behind the scenes turning their sessions from task completion into something that might support their learning.

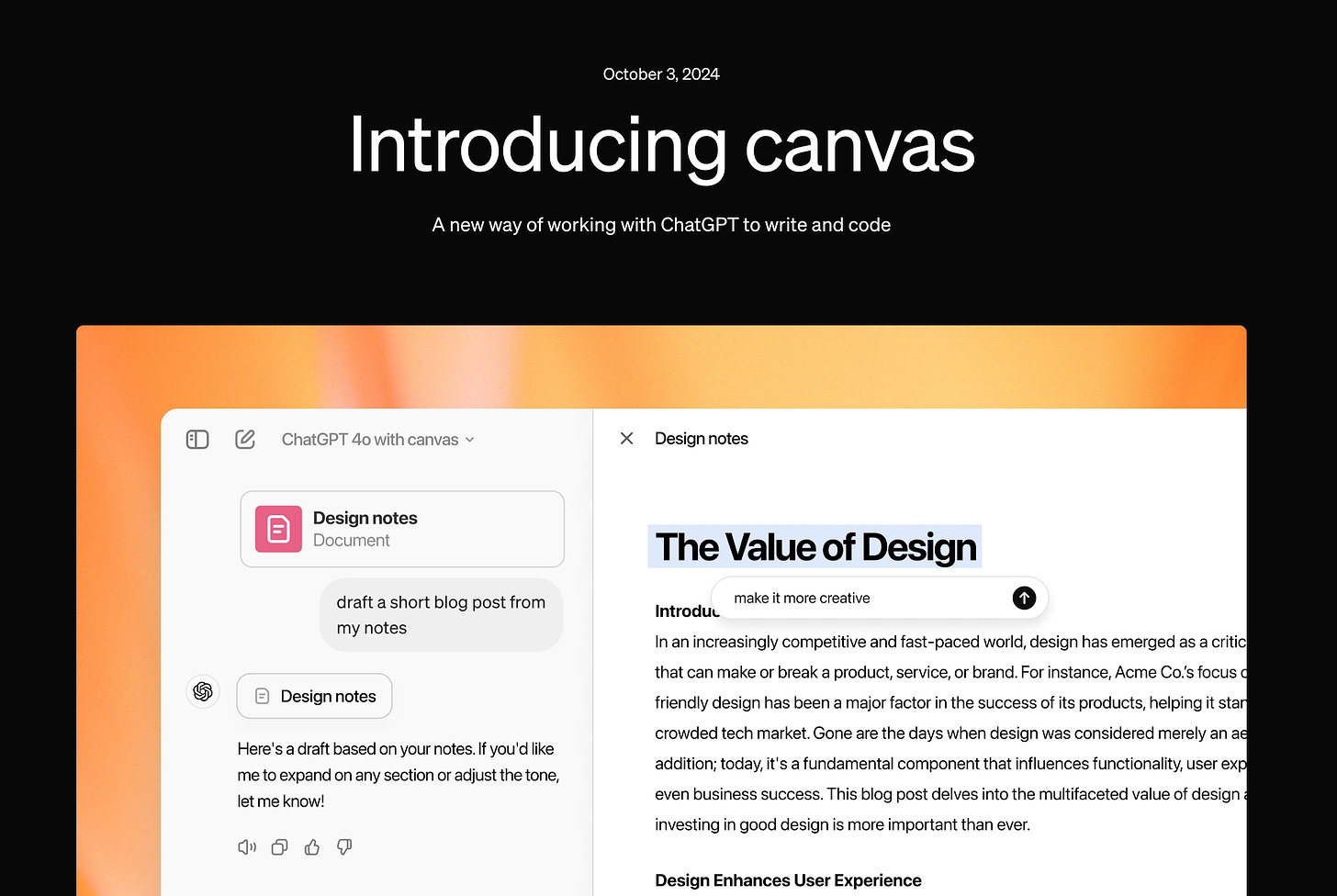

The level of disconnect here is baffling to me, given the number of students using their app. If companies like OpenAI want higher education to adopt their products, then they should make interfaces that support learning, not offload it or remove desirable difficulties in education. Even the newly launched GPT-4o with canvas feature seems geared toward a UX principle of reducing friction to the point where a user doesn’t even have to prompt any longer. GPT-4o with canvas has toggle switches and dials to expand or make the output more concise, change the reading level from kindergarten to graduate school, a button that generates feedback, and a button to add a final polish of edits to the piece.

Those features are convenient and simple to use, but do they support learning? One motto many of us took up this summer is that learning requires friction, and interfaces like ChatGPT often remove as much friction as possible for the user. We’ve gone from prompt+instant answer to button mashing for higher quality outputs. Does this equal learning or create the simulation of it?

Many Have Enabled AI Across Institutions Already

Circling back to my earlier point, why should institutions buy access to ChatGPT for their students when most students already use the free version of GPT-4o? 2024 is very different from 2022-23. OpenAI released its 4o model for all users. Plus users get more generations per hour, the ability to create their own Custom GPTs, access to newer, more niche models like o1, and voice, but that’s it. Few institutions are eager to activate voice until we know it is safe for students and we have data to support that. Likewise, the advanced ‘reasoning’ features of o1 aren’t all that useful for most everyday users. Students can interact with Custom GPTs for free and I don’t see many building their own.

What OpenAI appears to be selling is more bandwidth. Is that worth the cost? Many institutions already have enterprise-level AI now included in Microsoft Copilot and Google Gemini. These were bundled with existing licensing agreements, making it a hard ask for institutions to treat AI as an infrastructure cost beyond what they’re paying.

My overall sense is that OpenAI is scrambling for revenue as it switches to a for-profit model. How they do that while maintaining a public version of ChatGPT that is just as powerful as the private, paid-for version, isn’t clear to me. I honestly don’t get the sense they have an answer either. Students will have access to a variety of advanced generative models when they enter school. Most provosts and CIOs understand this. If faculty want more capable or niche tools, then they will ask students to buy them.

We Need to Talk About More Than Providing AI Access

All in all, I’m not sure why higher education needs to invest in AI for students and start budgeting for that when the biggest companies simply bundle the programs into existing software agreements or give users rate-limited free access. There are questions for smaller institutions and community colleges that don’t have those agreements in place, but I didn’t get the sense those voices were brought into the room.

There is excitement about what AI can do in the future, but not much time spent addressing what AI is actually doing to students now. Universities shouldn’t have to spend time and resources to train faculty to make AI effective in education. There’s no reason higher education should bear the burden of making a tool used so frequently by students actually work for learning rather than serve as a crutch. I’m not sure how we got to this point, but a company valued at over 150 billion dollars has no excuse for passing on the enormous undertaking of making their interface into something more than an autocomplete machine on steroids. Does anyone actually believe we’re going to see faculty and students craft prompts when the newest interfaces embrace button pushing for outputs?

Sarah Friar answered two questions at the Forum before running out of time. The person who sat beside me had a wonderful question and unfortunately didn’t get the chance to ask it, so I’ll do so now, anonymously for them. The question was, what does OpenAI plan to do to support AI efforts at HBCUs? I would hope a representative from OpenAI at a future Forum will answer that question.

Thank you for attending this and recapping it for us, Marc! It's appalling that the majority of ChatGPT users appear to be students and OpenAI is essentially pushing for increased use without any support for students, educators, or schools. Aside from this forum, of course.

It makes me wonder if Sam Altman is serious when he (and others) envisions a tutor in every pocket and full privatization of education.

Put another way: I think OpenAI's lack of support for educators is a feature, not a bug.

Quite a move, it seems, to try and shift the conversation towards, "will your institution offer AI access equitably to all your students?"

It skips past the valid questions/concerns being raised about the intersection of AI and education, and also appropriates an equity-driven lens in search of more profit.

(Do I think universities and other academic institutions are going to fall for this? Yes.)