Teaching is Not a Problem for AI to Solve

ChatGPT launched last year, and it is a mark of our cultural moment that people are wishing a program happy birthday on social media. Since then, the advances have been so rapid that it has been difficult to foresee how generative AI will impact education. But there is a recent example of how I don’t want to see AI integrated into teaching and learning. This fall, Alpha, a private school in Austin, replaced its teachers with AI and now teaches all core subjects with it. From the Chronicle:

"We don't have teachers," MacKenzie Price, the school's co-founder, told the Austin news station. "Now, what we do have is a lot of adults who are in the room engaging with these kids, working as coaches." The guides instead help students to clarify academic goals they're working on, Price added."So it's the educational information that regular schools are also teaching with, but AI apps are feeding them to students at the appropriate level and pace that they need," Price told KVUE.

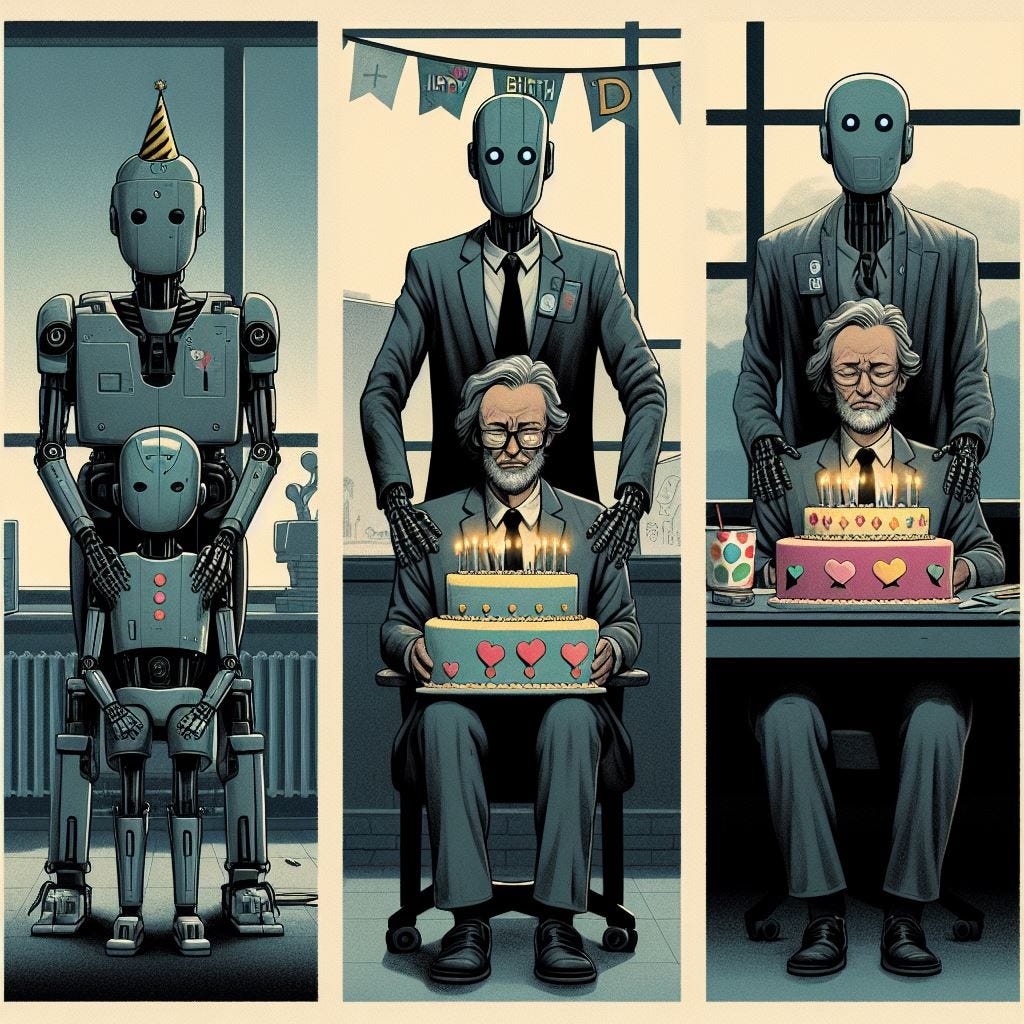

Teachers are not Self-Checkout Counters

Replacing teachers with guides to “coach” students is terrifying. There are clearly massive implications for labor here, but what of learning? When I first came across OpenAI’s Playground in the spring of 2022 and used GPT-3 to generate text, the implication was clear to me: developers would use this tool to ‘fix’ education. What I said then about text generation, that writing is not a problem to solve, I will now extend to learning: learning is not a problem to solve.

The pressures to address long-standing inequities in education are immense. Students aren’t reading at grade level, or meeting math standards. That’s just in K-12 nationally. Higher education faces increasingly bleak prospects in terms of growth and dwindling completion rates as more and more young people elect to not go to college. But don’t worry, technology is here to solve all of this! Right now, Microsoft is working on using generative AI to help students read at grade level; generative AI will soon be employed “to give students their own instructor, tutor, mentor, or companion, receiving tailored feedback and assistance throughout their learning journey;” chatbots will soon be the norm in our classrooms, allowing students to get education tailored to their specific learning and political beliefs; teachers will be using AI to generate lessons, assessments, even entire lectures. Some of these ideas may help students, but none of them are going to fix the structural inequities and other long-standing issues that plague education.

Technology is a Lousy Predictor of Human Behavior

Leif Weatherby’s recent op-ed, “A Few of the Ideas About How to Fix Human Behavior Rest on Some Pretty Shaky Science,” starts to articulate some of the issues we’re seeing with AI in education under the framework of behavioral economics. Under this frame of thinking, replacing a teacher with a generative AI system makes sense if it gives the student greater happiness, improved test scores, and a better chance at success. The problem with such thinking is that many of these results aren’t replicated when social or economic forces come into play. Alpha’s reported success with replacing teachers with AI might not be the same in a struggling public district. It might actually do more harm than good. As Weatherby argues:

Despite all its flaws, behavioral economics continues to drive public policy, market research, and the design of digital interfaces. One might think that a kind of moratorium on applying such dubious science would be in order — except that enacting one would be practically impossible. These ideas are so embedded in our institutions and everyday life that a full-scale audit of the behavioral sciences would require bringing much of our society to a standstill. There is no peer review for algorithms that determine entry to a stadium or access to credit. To perform even the most banal, everyday actions, you have to put implicit trust in unverified scientific results.

Remember back in the early days of ChatGPT when we saw a litany of think pieces proclaiming how it would end the college essay? Well, that hasn’t come close to happening, in no small part because the chatbot interface through which most people interact with generative AI is a poor substitute for more humanistic word processors. And while I’m sure students would love using generative AI to converse with the synthetic equivalent of famous characters from history, how do we trust such systems to not fabricate information or amplify cultural biases? When you start unpacking generative AI being used to replace a skill instead of augmenting it, you begin to see how rotten the onion is, layer after layer. There’s still no method for a user to audit a black box system, no reasonable means for an AI to articulate how or why it arrived at a decision. To allow it to stand in for a human offloads not only the labor of a person, but the entire moral, ethical, and responsible thinking we expect from a human being.

We Need an Ethical Framework for AI in Education

The rapid integration of generative AI into education raises profound ethical questions that we have yet to grapple with as a society. Even within education, there has been little discourse about AI and teaching. While innovations like personalized tutors and auto-generated lessons promise greater efficiency and access, they also threaten to undermine the human relationships at the heart of teaching and learning. This is especially true when they are adopted uncritically. What will become of us if we outsource moral reasoning or emotional intelligence to algorithms?

Students are not problems to be optimized or standardized via algorithm. Our students are complex individuals navigating critical stages of personal growth and development. I fear reducing them to data points risks psychological harm and stunting their humanity. Similarly, the teacher-student bond cannot be reduced to the role of a 'guide'; AI cannot foster character or community in the way a flesh-and-blood mentor can.

Before unleashing these technologies into our classrooms, we urgently need public debates on the values we wish to embed in the systems that influence learners and just how much we want to expose them to these automated systems. An ethical framework for AI in education starts from the primacy of human dignity, the necessity of free will and choice, and the intrinsic worth of every student regardless of measurable achievement. We owe future generations nothing less.

I completely agree. But finding the line between acceptable and efficacious use of AI in schools and classrooms vs. counterproductive use is going to be an enormous challenge, especially if, as Marc notes, the charge is led by the AI companies and not educators themselves. For that reason alone, it is paramount that more and better AI literacy be made available to K-12 teachers so they can learn what works and what doesn't when AI assists with teaching and learning. Amidst all the AI hype, I read Justin Reich's Failure to Disrupt: Why Technology Alone Can't Transform Education over the summer. His arguments and evidence should be sobering to the Silicon Valley crowd that believes generative AI is going to be a panacea for education. Reich convincingly argues these claims have been made many times before and schools are notoriously resistant to change. As a longtime teacher, I am not afraid of being replaced by AI, but I am nervous that schools will have difficulty striking the right balance and resisting an all-or-nothing approach. I think there can be real value in using AI to help students, especially some of our most vulnerable ones, but the reality is likely to be something very different.

Great essay! I’ve been pondering many of the pitfalls you mentioned. I’m curious if you’ve read or heard Dr. Vivienne Ming’s work on the topic?

Here are two relevant samples going back to 2018:

https://socos.org/books/books/how-to-robot-proof-your-kids/

https://www.classcentral.com/course/youtube-future-of-education-i-vivienne-ming-i-how-to-robot-proof-your-kids-i-singularityu-czech-summit-2018-156913