The acceleration of AI deployments has gotten so absurdly out of hand that a draft post I started a week ago about a new development is now out of date. The culprit this time is Google. Last week, Google rebranded its LLM chatbot Bard as Gemini and announced a paid tier that gives users access to its most powerful language model, Ultra 1.0. I had a blog post ruminating about what it means to have three companies release GPT-4-level language models now: OpenAI’s GPT-4, Microsoft’s Copilot, and Google’s Gemini. I wasn’t even able to put the finishing touches on that post before Google announced Gemini 1.5 Pro!

The Pace is Out of Control

A mere week after Ultra 1.0’s announcement, Google has now introduced us to Gemini 1.5 Pro, a model it is clearly positioning to be the leader in the field. Here is the full technical report for Gemini 1.5, and what it can do is stunning.

Millions of Tokens

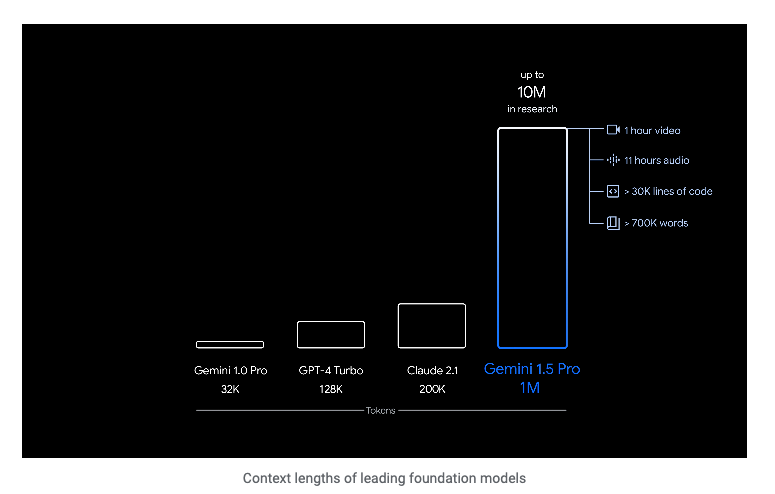

The big news is Google’s commitment to use LLMs and transformers on retrieval tasks across instances. Users will supposedly get up to 1 million tokens with Gemini 1.5 Pro, enough to prompt with roughly an hour of video, 10+ hours of audio, 30,000 lines of code, or upwards of 700,000 words. Researchers will get up to ten times as many tokens to play with.
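To make those numbers a little more concrete, here is a rough back-of-the-envelope sketch. It assumes the only conversion rate we have is the one implied by the announcement figures above (about 700,000 words per 1 million tokens); real tokenizers vary by language and content, so treat the output as an estimate, not a measurement. The function names `estimate_tokens` and `fits_in_context` are my own, made up for illustration.

```python
# Rough conversion implied by the announcement figures quoted above:
# about 700,000 words fit in a 1,000,000-token context window.
# This is an assumption for illustration, not an official tokenizer rate.
WORDS_PER_WINDOW = 700_000
TOKENS_PER_WINDOW = 1_000_000


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a word count, rounding up (integer math)."""
    return (word_count * TOKENS_PER_WINDOW + WORDS_PER_WINDOW - 1) // WORDS_PER_WINDOW


def fits_in_context(word_count: int, context_tokens: int = TOKENS_PER_WINDOW) -> bool:
    """Return True if the estimated token count fits in the context window."""
    return estimate_tokens(word_count) <= context_tokens


if __name__ == "__main__":
    # Hypothetical example: a semester of student essays totaling 250,000 words.
    words = 250_000
    print(estimate_tokens(words), fits_in_context(words))
```

By this estimate, anything up to roughly 700,000 words would fit in a single prompt, which is the scale that makes the "talk to your data" idea plausible.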

The use cases for this are profound. Within the announcement, Google included three short demos, and they are well worth watching. The big caveat with all of these hyped announcements is they don’t always live up to the promise.

Why it Matters: Using AI to Talk to Your Data

Google appears to be getting closer to using generative AI to effectively ‘talk to your data’ using Retrieval-Augmented Generation (RAG). Based on the use cases in the above demos, it’s clear that this use of generative AI could be transformative across society. But we’ve seen promises for RAG from Microsoft with lackluster results, so I would maintain a high degree of skepticism for now, even with the following:

“Gemini 1.5 Pro maintains high levels of performance even as its context window increases. In the Needle In A Haystack (NIAH) evaluation, where a small piece of text containing a particular fact or statement is purposely placed within a long block of text, 1.5 Pro found the embedded text 99% of the time, in blocks of data as long as 1 million tokens.”
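For anyone curious what a needle-in-a-haystack test looks like in practice, here is a minimal sketch of the idea. It is not Google's evaluation code. The filler text, the needle, and the helper names are all hypothetical, and `toy_model` is a simple string search standing in for a real LLM call so the example runs on its own. To test an actual model, you would swap in a function that sends the context and question to it.

```python
from typing import Callable, Sequence

# Hypothetical filler and needle, chosen only for illustration.
FILLER = "The quick brown fox jumps over the lazy dog."
NEEDLE = "The secret code is 7421."


def build_haystack(filler: str, needle: str, total_words: int, depth: float) -> str:
    """Repeat the filler to total_words words and insert the needle at a
    relative depth (0.0 = very start, 1.0 = very end)."""
    filler_words = filler.split()
    words = [filler_words[i % len(filler_words)] for i in range(total_words)]
    position = int(depth * len(words))
    return " ".join(words[:position] + [needle] + words[position:])


def run_niah(model: Callable[[str, str], str], expected: str, question: str,
             total_words: int, depths: Sequence[float]) -> float:
    """Return the fraction of depths at which the model's answer contains
    the expected fact."""
    hits = 0
    for depth in depths:
        haystack = build_haystack(FILLER, NEEDLE, total_words, depth)
        answer = model(haystack, question)
        if expected.lower() in answer.lower():
            hits += 1
    return hits / len(depths)


def toy_model(context: str, question: str) -> str:
    """Stand-in for a real LLM: finds the needle sentence by plain string search."""
    marker = "The secret code is"
    start = context.find(marker)
    if start == -1:
        return "I could not find it."
    end = context.find(".", start)
    return context[start:end + 1]


if __name__ == "__main__":
    recall = run_niah(toy_model, "7421", "What is the secret code?",
                      total_words=5_000, depths=[0.0, 0.25, 0.5, 0.75, 1.0])
    print(f"Recall: {recall:.0%}")
```

The number a test like this reports is simply how often the buried fact comes back, which is the kind of figure the 99% claim refers to. Finding one planted sentence is a much narrower task than reasoning across a whole document, which is one more reason to stay skeptical.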

The Takeaway: Things Aren’t Slowing Down

What we so badly need in education is stability and a slowdown in generative AI deployments. Professional development in our field comes in brief snapshots. We might be able to host a handful of lunch and learns, a professional development day or two, or some sporadic workshops, but this model of PD fails completely when generative AI isn’t stable.

This is one area I’ve tried to address by creating the AI Institute for Teachers. Faculty need sustained and committed professional development that isn’t a one-off workshop or Zoom. Such events serve important functions, but without sustained engagement, time, and funding, these short events only serve as the town crier announcing the latest update. I want faculty to do more than be informed; I want them to be empowered to make decisions that honor the dignity of their labor and their students’ learning in this new digital age. That’s only going to come from training.