The Price of Automating Ethics

Why AI Detection Alone Will Fail Students

This post is the sixth in the Beyond ChatGPT series about generative AI’s impact on learning. In the previous posts, I discussed how generative AI has moved beyond text generation and is starting to affect critical skills like reading and note-taking, and to reshape relationships by automating tutoring and feedback. This post deals with how generative AI challenges our approach to academic misconduct and how AI detection affects students. The goal of this series is to explore AI beyond ChatGPT and consider how this emerging technology is transforming not simply writing, but many of the core skills we associate with learning. Educators must shift our discourse away from ChatGPT’s disruption of assessments and begin to grapple with what generative AI means for teaching and learning.

Beyond ChatGPT Series

Note Taking: AI’s Promise to Pay Attention for You

Guilty Until Proven Human

In higher education within the US, academic misconduct is often left at the discretion of an individual faculty member. Each professor is the king or queen of their classroom and often receives little guidance about how to enforce broad policies about misconduct. Having such personalized approaches to academic honesty certainly doesn’t help matters when it comes to generative AI and the utter lack of consensus on how the tool should be used by students. This lack of a unified or centralized direction is frustrating for educators, but it is also absolutely maddening for students who often struggle to understand what AI is outside of a tool like ChatGPT.

Faculty Need AI Literacy to Understand AI Detection

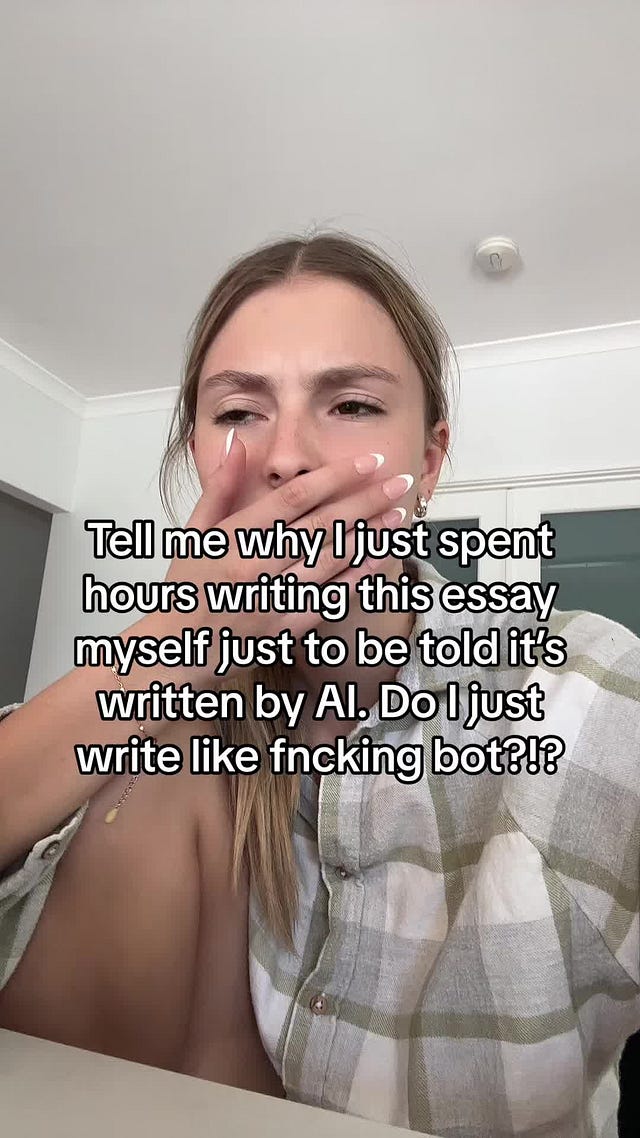

Many institutions adopted unreliable AI detection early on, and thankfully, some have now switched it off. However, it is clear that many still use it and it is harming students. Log into Instagram or TikTok and you will find plenty of stories from students claiming they were falsely accused of cheating using ChatGPT or other AI tools and cannot find a way to prove their innocence.

Marley Stevens’ experience of using Grammarly’s widely accepted editing features and setting off Turnitin’s AI detector is just one case of a student claiming an AI detector falsely flagged their work. Each story is tragic and 100% preventable. However, most institutions don’t have the capacity to teach faculty ethical AI usage, which robs students of the opportunity to use the tools transparently.

Self-Surveillance is a Terrifying Dystopia

The fear of being wrongly accused has driven some students to extreme measures of self-surveillance. They keep careful logs, using technical features of the software they write in, as evidence that the text they submitted was indeed their own human writing. How would you feel as a student having to write in specific software out of fear that another piece of software would accuse you of cheating? That’s exactly what many students are doing as they turn to Google Docs for timestamps of their writing.

I’ve written before about GPTZero’s troubling attempt to pivot from the AI detection industry into using self-surveillance to “Human Mark Your Writing,” where users upload their writing and the company certifies that it was written by a human and not a machine. This poses deeply concerning questions about user autonomy and privacy. Why should we be asked to surrender our data and writing to an opaque, proprietary system? What's to stop such a system from potentially repurposing that data for other aims, like training future AI models?

Putting aside privacy concerns, GPTZero's premise is also flawed from an academic integrity standpoint. Such a system assumes there is a clear line that can be drawn between "human" and "AI-generated" writing. Just as in Marley’s Grammarly example, most student writing exists on a spectrum—using generative AI for tasks like outlining, research gathering, revision assistance, and even co-writing. Enforcing a crude "human vs AI" binary does little to advance the conversations we need about how to responsibly integrate generative AI as a tool to help writers.

Ultimately, schemes like GPTZero's "Human Writing Report" represent a terrifying path toward a self-surveillance dystopia. One where students constantly have to earn validation of their work via algorithm, hoping that it clears arbitrary thresholds of "human-like" quality. This robs students of personal agency over their own writing and learning journeys. It is an unsustainable and unethical model for long-term educational practices.

Fear is undeniably the driving force behind some students' personal decisions to adopt self-surveillance measures like meticulously logging their writing process. However, when a company or organization promotes always-on surveillance as an option or solution, it may represent a form of control over users. Microsoft recently announced their new AI-enabled PC feature dubbed Recall that takes screenshots of your desktop every few seconds, recording your every digital move, and uses AI to track your steps. The company’s pitch to consumers is simple—AI doesn’t know enough about you to support the tasks you’d like to automate. Adopting such a system gives the AI a much clearer picture of how you interact with your data. A user won’t have to worry about vaguely prompting the AI about an email or spreadsheet—the algorithm has tracked it and accurately predicts what you want from it.

Microsoft promises that your data is encrypted, but we know from emerging research that AI systems aren’t entirely secure. It’s easy to see how some would view a system that monitors your every moment as a solution to our current problem of asking students to use AI ethically and transparently. Simply submit screenshots using a bespoke service to certify what tools you did or did not use on your assessment. Of course, you do so at the expense of your privacy and autonomy.

Google’s Watermarking Tool

Google isn’t getting the same press for its generative AI products as OpenAI, and that’s a shame, because what it recently announced may change how people use the technology. Pivoting away from AI detectors and self-surveillance, Google is now committed to watermarking all AI outputs using a program called SynthID. The program is in beta now, but we’re likely to see more widespread adoption of watermarking, along with more challenges to generative outputs because of it.

How Watermarking Works

Watermarking isn’t the same as using an AI detector to guess whether a response was generated or composed by a human. Watermarking uses a secret cryptographic key to embed a pattern in the model's word choices as it generates each output, a pattern undetectable to human readers. You can’t easily break it. You can change some words, even whole sentences, and the watermark can still be detected, though heavier rewriting weakens it. So it is far from foolproof. Since there is no universal standard for watermarking, SynthID is specific to Google's models. It won’t work with OpenAI or Microsoft or scores of other third-party generative AI models, but it is a step in the right direction toward accountability and transparency in the ethical use of AI.
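For readers who want to see the general idea in miniature, here is a toy sketch in Python. It is not SynthID's actual algorithm, which Google has not reduced to anything this simple. It assumes a common textbook approach in which a secret key marks roughly half of all possible next words as "green," the generator quietly prefers green words, and the detector counts how many green words appear. The function names, the made-up vocabulary, and the random "language model" are all hypothetical stand-ins.

```python
import hashlib
import math
import random


def is_green(key: str, prev_word: str, word: str) -> bool:
    """Keyed coin flip: about half of all words count as 'green' after any given word."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode()).digest()
    return digest[0] % 2 == 0


def generate(key: str, vocab: list[str], length: int, seed: int, watermark: bool) -> list[str]:
    """Stand-in for a language model: picks random words, preferring green ones when watermarking."""
    rng = random.Random(seed)
    words = ["<start>"]
    for _ in range(length):
        candidates = rng.sample(vocab, 4)  # pretend these are the model's top choices
        choice = candidates[0]
        if watermark:
            green = [w for w in candidates if is_green(key, words[-1], w)]
            if green:
                choice = green[0]
        words.append(choice)
    return words[1:]


def z_score(key: str, words: list[str]) -> float:
    """How far the count of green words sits above the 50% expected by chance."""
    previous = ["<start>"] + words[:-1]
    hits = sum(is_green(key, p, w) for p, w in zip(previous, words))
    n = len(words)
    return (hits - 0.5 * n) / math.sqrt(0.25 * n)


if __name__ == "__main__":
    vocab = [f"word{i}" for i in range(50)]  # hypothetical vocabulary
    marked = generate("secret-key", vocab, 200, seed=1, watermark=True)
    plain = generate("secret-key", vocab, 200, seed=2, watermark=False)
    print("watermarked text:", round(z_score("secret-key", marked), 1))
    print("ordinary text:   ", round(z_score("secret-key", plain), 1))
    print("wrong key:       ", round(z_score("other-key", marked), 1))
```

In this sketch, a high score means the text carries far more green words than chance would produce. Without the right key the same text looks like ordinary writing, which is why a scheme along these lines depends so heavily on who holds the key.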

The Challenges of Watermarking in our AI Era

The idea of being able to tell when a video, picture, or bit of audio was machine-generated is likely going to be a crucial feature in combating deep fakes, misinformation, disinformation, etc. We’re going to need some type of system to separate the synthetic vs. genuine content. But watermarking isn’t a one-stop solution and its launch may have downstream effects we haven’t thought about.

Imagine being asked by your boss to complete a task. You use Google’s AI tools to create a slide deck, part of the text for a talk, or even a short video marketing something. Your boss is pleased, but instead of paying you for the entirety of your work, your boss only wants to compensate you for 60% because you used AI and the watermarks show this.

Or flip the scenario. Your boss has been on you to complete your work faster and be more productive, but you did not use AI and instead rely on your human skills to complete your tasks. During your performance review, your boss notes there are no watermarks in any of your work and threatens to deny you a promotion on the grounds you don’t use technology in a way that is most productive for the company.

There’s no ethical playbook for how people will greet watermarked generative outputs. AI-generated content functions in our world under the illusion that a human being created it, not a machine. When you take that away, you risk alienating your audience, even insulting them by embracing a tool to do your communication for you. All it takes is one tweet dragging a company’s press release through the gutter to make industries hesitate, even ban AI.

Students Can Easily Bypass Watermarking

A single company committing to watermarking text probably won’t stop students from using generative AI in academically dishonest ways. Text is fungible by its nature, and without a universal standard for watermarking outputs that everyone agrees on, all someone needs to do is run Google’s output through an open model that rewrites it and strips the watermark. Even if we somehow came together under an international treaty and had all nations agree on a watermarking standard, this solution could still ultimately fail because it relies on a single cryptographic key to detect the watermark. All it takes is one leak for the key to become public and the whole house of cards comes tumbling down.

And frankly, I shudder to think how some would use a generalized watermarking scheme with students given the recent examples we’ve seen with AI detection. We need more equitable and thoughtful means of establishing provenance in digital writing that ensures personal agency and autonomy.

Teachers are in a unique position to know this phenomenon all too well. We’ve been on the receiving end of students attempting to pass off AI-generated content for the past 18 months. It feels awful when a student turns in a personal reflection that was generated by AI. A student author takes a genre of writing that is supposed to be uniquely personal and uses a machine to try and pass off their learning.

Making matters worse, the trust between teacher and student is now tested, strained, and often broken in the process. Many institutions don’t have the capacity or resources to embrace restorative practices in academic integrity. The ethical breach is not repaired and the damage done to the relationship and the community is not addressed. The cycle continues, leaving both teachers and students increasingly frustrated.

We Cannot Continue Outsourcing Ethics to a Machine

If generative AI is truly good at one thing, it is tearing down the facade of a given practice and revealing how shoddy the foundation was to begin with. ChatGPT made many in education confront writing practices that produced rote outcomes. AI detection has done the same with how little work we put into teaching students what it means to use these new tools ethically. The last 18 months should send a clear message that teaching ethical behavior isn’t something we should automate.

It will take years of practice and failures big and small before we can teach human beings how to use this new technology in transparent ways. Doing so will start with students and it will take patience. Students will push boundaries. They will test our limits. And, yes, many of them will use these tools to offload their learning in academically dishonest ways. We won’t curb that by tasking a machine to automate enforcement of ethical standards—we must teach it. There may be room for some combination of the detection tools discussed, but can we say academia as a whole has adopted the use of these tools any more critically than the students we fear will use AI to cheat the system and avoid learning?

Great essay! I would add that it isn't just tools but also methods. We can champion all kinds of alternative lower-stakes assignments, and they may help, just as some tools may, but there is no substitute for ethical judgement, critical thought, and empathy on the part of a teacher who cares both about the subject and the student.

I believe this paper came out before ChatGPT was released to the public in the Fall of 2022:

https://drsaraheaton.wordpress.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

The part that jumped out at me was this tenet: "Hybrid writing, co-created by human and artificial intelligence together is becoming prevalent. Soon it will be the norm. Trying to determine where the human ends and where the artificial intelligence begins is pointless and futile." I've thought about "pointless and futile" a lot and posts like this make me wonder if all the focus on AI detection will ultimately be a giant waste of time. And yet, from an instructional standpoint, we must be prepared to help students with the basics before they learn where AI may or may not help them in their writing process. The next few years will continue to be a wild ride for writing teachers as we try to crack the code on what works best and what doesn't, all while newly released models are continuously introduced which will challenge us to constantly refine what it all means for students. But I know that students are desperate for guidance and an adversarial approach revolving around grades and assessment is not the most fruitful path.