We Need to Reclaim Slowness

Since OpenAI released ChatGPT in November 2022, countless companies have followed suit, releasing their own flavors of generative AI to the public in what amounts to a grand public experiment. The features you can access with the $20 GPT-4 plan are better than anything the government has; there's no super-secret AI in development at the Pentagon. For the first time in history, the general public, not the military or private industry, has access to the most advanced technology. You and your 85-year-old neighbor can access the same GPT-4 features as an analyst studying misinformation for the NSA. No one is sure what the consequences will be for society.

Google’s “Help Me Write” Comes to the Chrome Browser

You can now use generative AI in any interface through Google's Chrome browser. Simply activate the "Help Me Write" feature, and you are one click away from adding generated text to any web interface. It's another inflection point, but we've already had so many that most people likely won't notice. After all, the market is so saturated with generative this and that; why pay attention to yet another feature? Well, this one matters. In our cloud-connected world, a browser-based AI that's always available, scanning whatever web page you are on and scraping all of its data, is a security nightmare.

In Substack

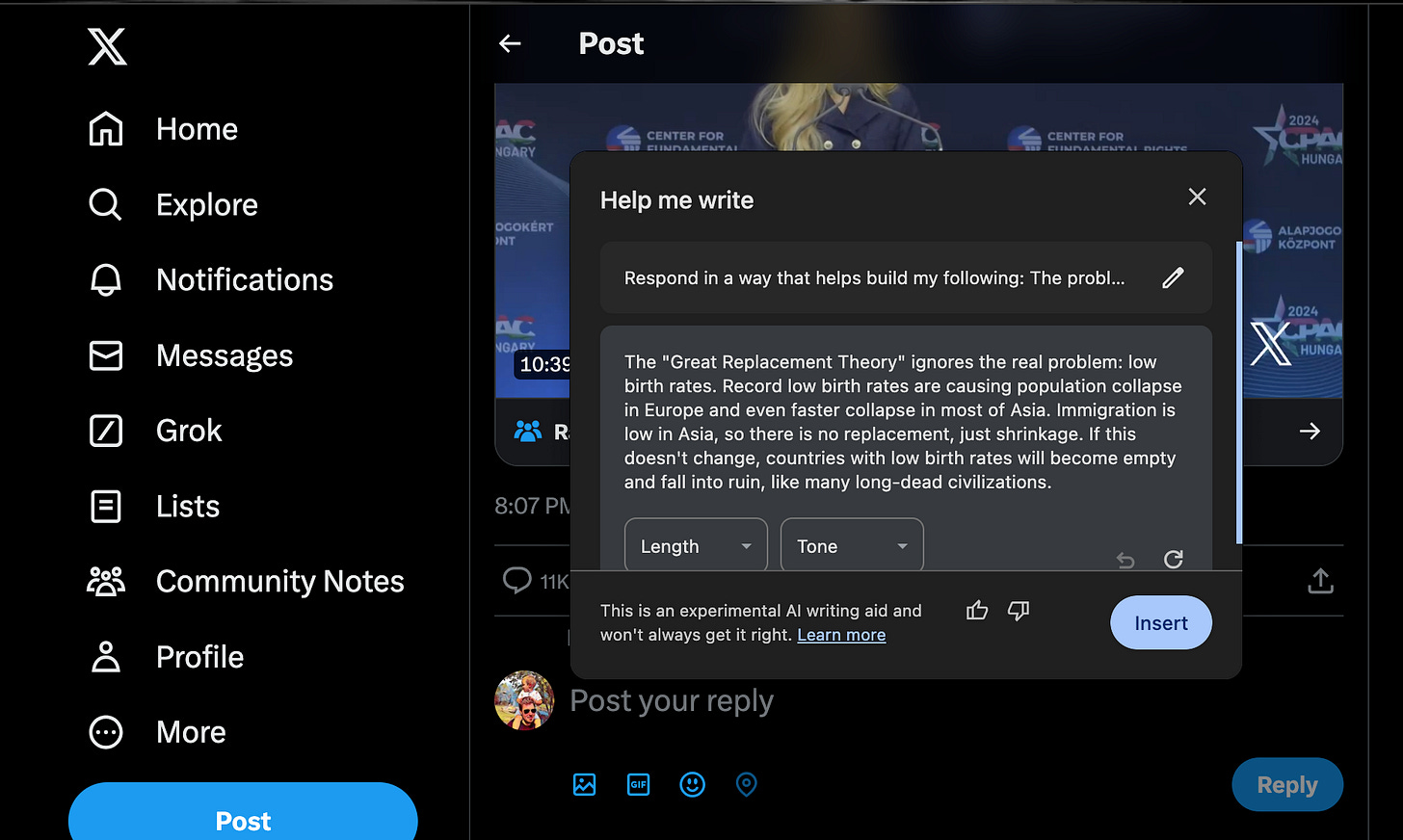

Responding to Elon Musk on Twitter

I wish I had an answer for how we deal with this in our classrooms, but I don't. I don't think anyone has a clue how to handle browser-based AI across the countless businesses that work with sensitive data in our cloud-connected world. The balanced view is that such a feature gives users easier, on-demand access to generative tools. This could help people efficiently complete simple tasks that are often mundane.

My students can use it to complete their assignments in Blackboard in less time than it takes to copy and paste a prompt into ChatGPT. With a single click, the AI reads the assignment prompt and gives them a short answer; they don't even need to copy the prompt. I know many will become quite upset about this, but that's not what scares me. As an educator, I can then click on a student submission and do the same thing, using AI from my browser to offer feedback. Not only is this an ethical dilemma for faculty, it's also a major FERPA issue, since the student's assignment is scanned into the AI.

Student Submission

Faculty Feedback

Our Obsession With Saving Time

I can generate a few sentences of simple AI feedback in seconds, but it takes me longer to process a student's submission and put it in conversation with the broader learning happening outside the assignment. The AI doesn't know what the student did in class, how they contributed to the conversation, or how what they wrote added to those earlier insights. It simply generates a competent response that uses dozens of words to say very little.

What was gained here? Using generative AI for both the submission and the feedback meant neither the student nor the teacher had to think. I no longer have to engage repeatedly in what becomes an exhausting amount of feedback. The student doesn't view the assignment as learning, just another task to check off before going about their day.

This focus on saving time goes well beyond education. Last year, Wendy's announced that it was experimenting with replacing human fast-food order-taking with generative AI. That pilot program is now expanding nationwide.

On average, Wendy's said, generative AI saved about 20 seconds of ordering time compared with a human employee. Replacing a person with automation is also far cheaper. The practice will likely spread to other fast-food chains across the globe. How many hundreds of thousands of jobs will be replaced, all because a machine is a third of a minute faster at completing a task than a human being?

Learning Is About Friction

What generative AI gives the user is a frictionless experience. Regardless of what you ask a chatbot, the response is always instantaneous, confident, and reasonable-sounding. Users trade accuracy for speed and cede their critical thinking to a technology they likely don't understand, knowing only that it gives them a quick response.

The current chatbot interfaces can be used to help students learn; that's undeniable. We've seen hundreds of classroom use cases for the tech, but nearly all of them ask the user to pause and add friction to the overall experience. After all, learning is friction. Using AI in learning cannot be so lightning-quick that a user doesn't bother to examine the output or take ownership of it. Pedagogically, this puts education at odds with the current wave of frictionless chatbot interfaces dominating the market.

I fear education is setting itself up for defeat by trying to introduce friction into a technology that does not allow for slowness, uncertainty, or critical examination. This battle must be waged outside the classroom, in the arena of critical design. We need to advocate for slowness, for moments of built-in inquiry that make users pause and examine what this technology generates. Only then can we unlock its transformative potential in education, fostering an environment where students and teachers alike can harness the power of AI while preserving the skills of analysis, creativity, and intellectual curiosity that define the human experience.

The generative AI backlash is definitely upon us: judging from my anecdotal impression, two-thirds or more of the recent think pieces on AI skew negative. And with good reason. No one can keep up. With respect to education, Marc's point about the "frictionless" experience is exactly right. I am trying to find a way to help my students use AI in their writing process, but what comes back every time is that they don't know enough about writing to use the tools effectively. It's a circular loop. Any AI-generated feedback is simply too anodyne and generic to be of much help beyond the very basic contours of organization and sentence structure, which we've had with Grammarly for years. I've gotten better results with outlining, but writing should be hard, especially for novices. I'm not sure how "better AI" will be able to solve the issues Marc references ("it doesn't know what the student did in class, how they contributed to the conversation, or how what they wrote added to those earlier insights") unless we have an AI transcript of every class discussion, and even that would not capture all the nuances and interactions you have with students when discussing their work. In short, to get students to use AI effectively, we have to model it for them while we are still figuring out how to do it ourselves. I would still like to see a place, either online or in person, for an AI symposium of experienced educators who are engaged in this process. As for this recent upgrade or tool that allows AI to be constantly "on," fortunately most of my students are not savvy or interested enough to use it. Yet.

I love your idea of reclaiming slowness! I’ve tried to do something similar in my classroom with deeper thinking.

https://open.substack.com/pub/adrianneibauer/p/philosophia?r=gtvg8&utm_medium=ios