Your Students Are Already Using AI You've Never Heard Of

Far too many people view the free version of ChatGPT as a measure of what generative AI is capable of, and it is providing many in education with a false sense of reality.

Since OpenAI launched GPT-4 last year, hundreds if not thousands of third-party apps have used the API for its most advanced multimodal model to sell students on text generation, automated lecture capture and note taking, reading and research assistance, and GPT-vision tools that scan questions with a phone camera. These are all things the public version cannot do very well. Raising people’s awareness of the baseline capabilities of generative AI is a constant struggle against ever-shifting goalposts.

Google’s Gemini Ultra 1.0 is here (bye Bard), and Ultra 1.5 has already been announced. OpenAI just announced Sora, their text-to-video model, and will likely upgrade to GPT-5 soon. Microsoft rebranded Bing’s chatbot as Copilot, powering it with their own version of GPT-4. Anthropic is raising a phenomenal amount of capital for their own models. And these are just the big companies. Hundreds of others are developing their own bespoke AI services. Adobe, for example, recently announced that it will build generative AI into its PDF reader to summarize documents automatically.

Why Free ChatGPT Is A Problem

Most people don’t spend hours each week plugged into the most up-to-date news about AI. The hype around ChatGPT meant most educators became aware of it quickly, and the free, simple chatbot interface meant most folks could easily try it. Therein lies the rub: many who tried ChatGPT’s free 3.5 model found it utterly unimpressive in what it could produce. The writing was always generic and boring, the citations were filled with made-up bullshit, and hallucinations abounded.

This creates a false sense of what the technology is actually capable of. Here’s what I suggest: sign up for a free account with Microsoft Copilot and test GPT-4. If that doesn’t impress you, then sign up for a free trial of Google’s new Gemini Advanced model using Ultra 1.0 and test its features. Spend some time exploring. Don’t give up once you encounter something you don’t completely understand. Poke around. Use your skills to write better and more detailed prompts. Doing so will help you judge what these newer models are able to do.

Those are the free options that give users access to the most advanced models now. If you can afford it, spend $20 for at least one month to access GPT-4 and see what customized GPTs offer and how advanced the model is compared to ChatGPT’s public version.

A Snapshot Of What You Can Do With Paid Models

Let’s take a tour of how different the experiences are using some of these features native to paid versions of the models. I’m only going to focus on research and finding sources for a lit review. There are dozens more use cases.

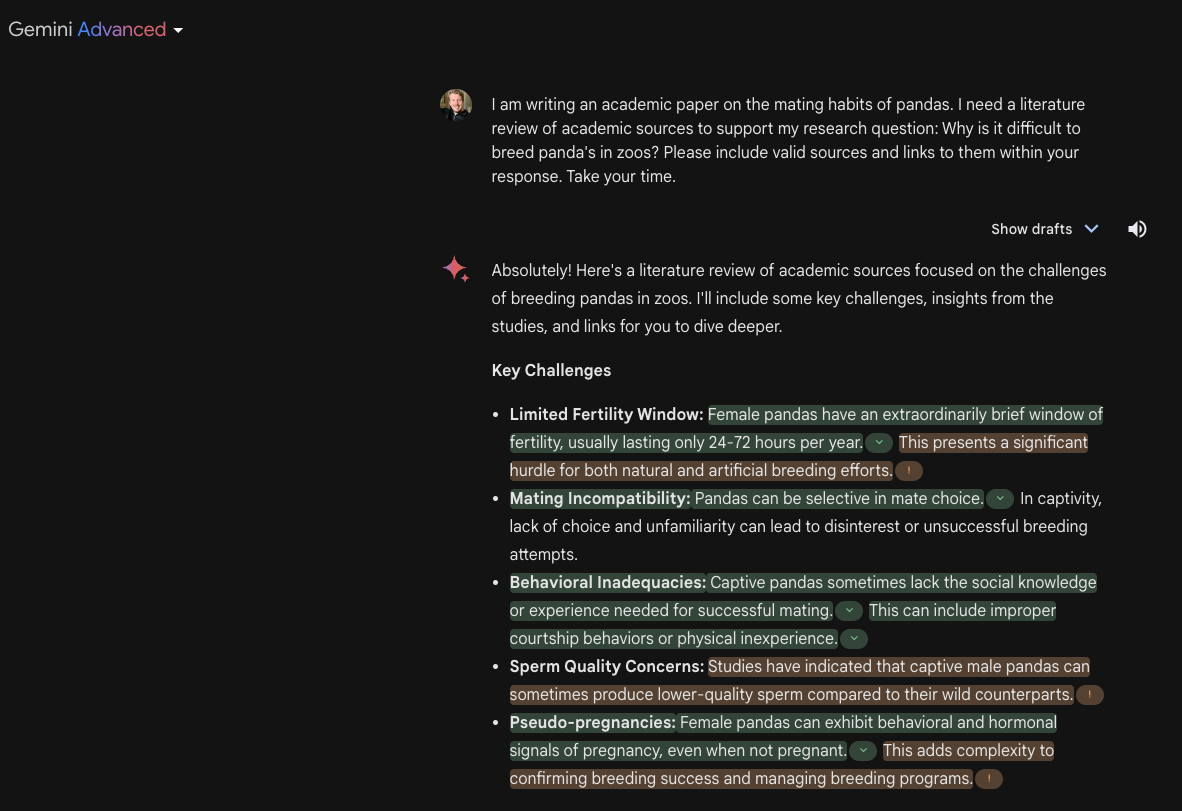

Finding Valid Sources—Gemini Advanced

Google’s Gemini has a built-in feature that uses Google search to double-check the output given by Gemini. In this case, the green sources led back to valid and credible sources that I could click and double-check, while the tan sources could not be fact-checked.

Finding Valid Sources—Microsoft Copilot

Arguably, Microsoft Copilot is even more effective at finding valid sources to populate a lit review. It not only sources each response but also includes a visual link to let me know what a literature review is!

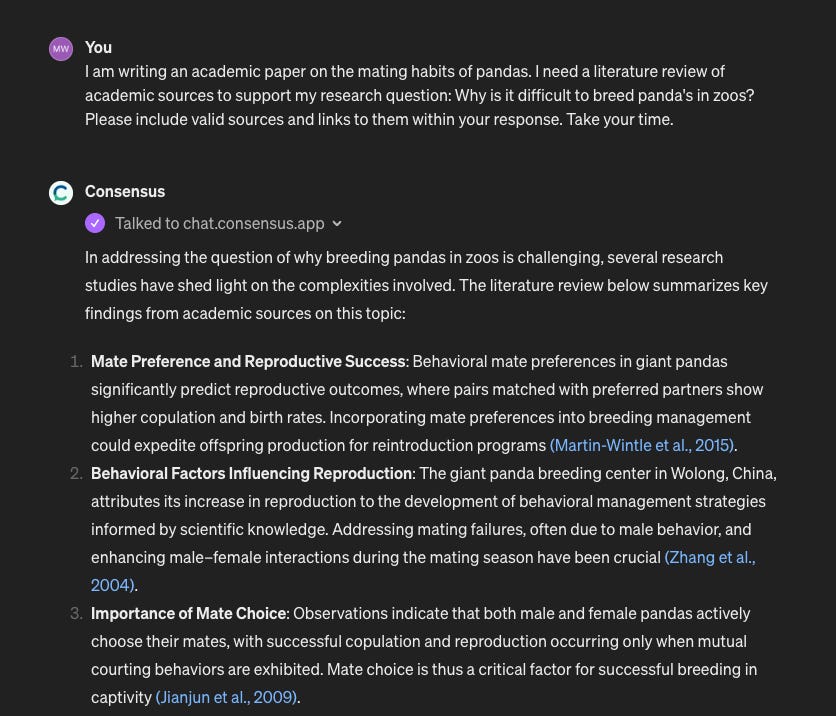

Finding Valid Sources—GPT-4 using the Consensus Plugin

The paid-for version of ChatGPT using GPT-4 gives users powerful access to hundreds of plugins. Consensus is one of the most powerful research plugins in OpenAI’s GPT store. It allows a user to search academic journals and summarize them within the output. Each one of these is a stub that I can then further expand.

There is No Static Curriculum

There are some really great curriculum options being developed to help educators navigate generative AI, like Stanford’s recently announced CRAFT, but there’s a fundamental problem virtually none of them address—without stability, none of the material outside of the most basic literacy will be applicable for long. We should all expect radical changes to be common over the near term, and this complicates the long-term outlook for how average people, not just early adopters, will actually use these tools.

Yes, we can update training material and curriculum about AI’s capabilities with more money and more staff, but this isn’t solely a resource problem. It is an attention problem. Few have the capacity to continue doing their normal jobs while maintaining up-to-date knowledge about the latest upgrades and capabilities, or about what these mean for their teaching or their students’ learning. Expecting people to do so asks them to live on the edge of a technological frontier that calls upon them to learn and relearn skills to keep pace with the latest announcements. Who wants to live that way? This means that instead of adopting AI en masse, most people will disengage and return to using their existing skills.

Strategies That Go Beyond Our Moment

Raising awareness about the baseline and advanced capabilities of generative AI, especially in education and in the broader public understanding, will take more than a single strategy. We need to bridge the gap between perceptions formed by initial interactions with free AI tools and the realities of what advanced AI models can do, particularly when leveraged through paid services or specialized applications. We need to begin exploring, in depth, ideas that address the following:

Updated Training Experiences for Educators

Access to Advanced AI Models

Integrated AI Literacy Across Disciplines

We are all Test Subjects in the Biggest AI Experiment in History

It’s easy to get lost in the absurdity of our current moment. Many companies have rushed to upgrade their existing offerings with generative features in real time, without clear use cases and without bothering to work with educators to build the capacity needed to train future users. It’s important to keep in mind that all of these generative tools are labeled as experimental, and we are the test subjects. No single company has a clear understanding of how users will adopt generative AI, or a long-term plan to upskill employees and keep them abreast of the most up-to-date features. Human beings need time and familiarity before they truly adopt something as part of their daily practice.

As I’ve written about previously, until the constant changes in interfaces and underlying capabilities of language models slow down, most users will never adopt generative AI as part of their daily workflow. Without a protracted period of stability, generative AI will remain a novelty for most, while early adopters reap untold benefits.

This is a huge problem and I don't see any solution in sight at the moment. Already, many people I've spoken to who were excited about genAI even 6 months ago have either lost interest or are overwhelmed by the explosion of new tools geared toward the education market. On another note - why so little coverage of the absolutely crazy glitch on GPT-4 a few days ago? For about 12 hours, GPT-4 was spewing absolute nonsense and was totally off the rails. I have several chats of total gibberish in response to normal prompts. I've seen nothing in the mainstream press about it - OpenAI claims they fixed it, but the truth is they have absolutely no idea what happened. That only makes it more difficult to convince AI skeptics to have confidence in these models. My anecdotal observation as a heavy user of GPT-4 is that it has gotten worse over the past 6 months. Hard to pinpoint why, but the results I've been getting have been much less impressive, shorter, and not nearly as innovative as what I've gotten previously.

Yeah, no one is talking about that meltdown. Oh well... this thing we are all coming to rely on and want to integrate into schools suddenly breaks down completely, and OpenAI just says "No comment." Word on the street is that GPT-5 testing is happening right now inside GPT-4. Some users are experiencing breakthrough results, others diminishing returns, and then this breakdown. We shall see what comes of it all.