AI Instructional Design Must Be More Than a Time Saver

This post is the seventh and final essay in the Beyond ChatGPT series about generative AI’s impact on learning. A recap and next steps are forthcoming. For this final post, I’m going to focus on modeling ethical usage of AI with students by being transparent when we use AI in instructional design. Few seem to be doing this in education.

Beyond ChatGPT Series

Note Taking: AI’s Promise to Pay Attention for You

AI Detection: The Price of Automating Ethics

We dropped my daughter off at sleepaway summer camp for the first time a few days ago. No phones, no devices, just nature. Yet my wife and I hover around our phones each evening using the app the camp sent us, eager for the deluge of photos they upload each night. We don’t have to scroll through hundreds of images; the app’s facial recognition software recognizes our daughter’s face and tags her in photos. The campers don’t get email, but we can click the “letters” tab in the app and write to her. The camp counselors print off the letters each morning. While going to summer camp remains a singular technology-free experience for my daughter, our view into her time at camp is one completely mediated by digital technology.

We Need Transparency When Using AI in Instructional Design

Students are confronted by a slew of generative tools that promise them amazing time-saving shortcuts in so much of their learning, and now educators will likewise be greeted with the option of generating learning materials each time they use a digital tool. The experience of learning was already highly influenced by technology. Some appear eager to take the next step and offload as much of the design process as they can.

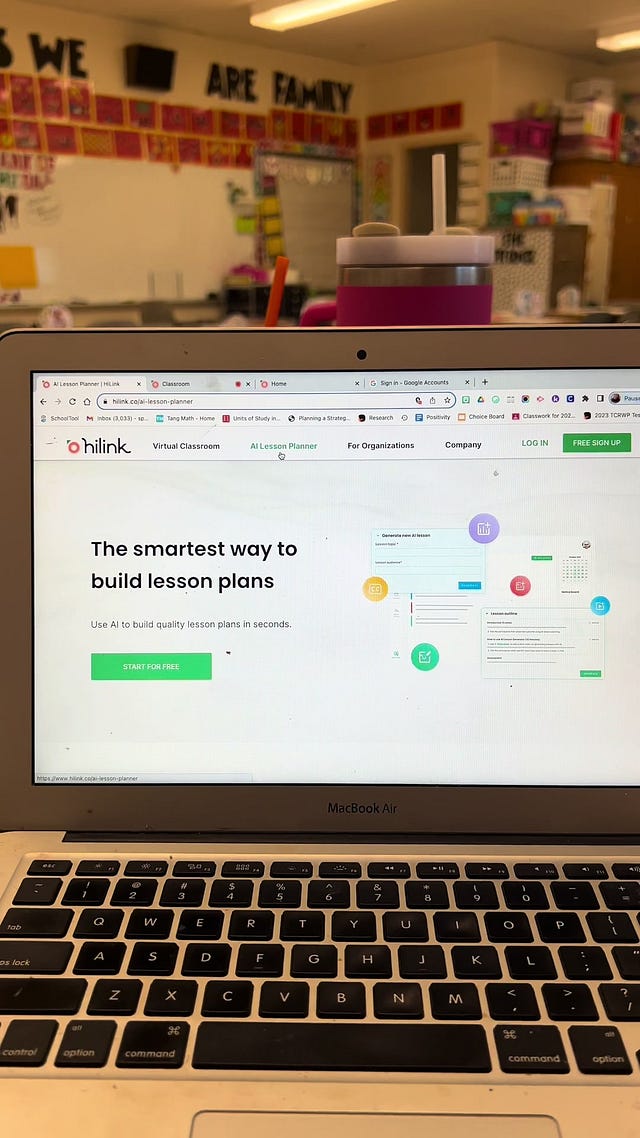

Generative AI is now freely available to teachers on far too many platforms to list. I can create lesson plans using Microsoft’s Copilot for education, generate rubrics using Blackboard’s AI Assistant, and even create bespoke IEP plans using Khan Academy’s free Khanmigo for teachers. None of these come with a rule book or best practices. They simply show up one day on your socials or in your inbox and invite you to use them.

What ethical framework should we require of ourselves and ask of our colleagues each time they are confronted with the option of generating teaching materials? More pointedly, how can we as educators avoid the trap of asking students to be honest and transparent each time they use an AI tool if we do not expect such transparency ourselves?

Digital learning is its own industry, and many of us find ourselves busily working inside learning management systems or using various edtech tools our institutions bought for us, with little regard for how students interact with the materials we design. I began teaching online long before standards for distance education were readily available through organizations like Quality Matters. As a grad student, the sum of my training with learning management systems involved a 45-minute “getting started” session. Programming has come a long way since those days, but the pandemic showed how many institutions weren’t prepared to support their faculty through the rapid shift to remote teaching, and my fear is we’ve learned little from this as the new wave of AI tools arrives.

Time Is The Problem, AI Is Sold As The Solution

What we see across social media are teacher-influencers talking up scores of different AI tools, and selling them as time-savers to generate learning activities for their students. Educators are overworked, underpaid, and faced with ridiculous expectations, so why not use a free AI tool to help?

I haven’t come across a single influencer talking about using AI critically for instructional design. All too often, the selling point is the veritable “magic” of using the tool to generate an activity or lesson with the utmost speed, and little time or regard is given to the accuracy of its output. To be clear, I’m not blaming teachers. They’re targeted by these companies in much the same way that many of the AI tools discussed in this series target students.

We’ve ceded so much trust to digital systems already that most simply assume a tool is safe to use with students because a company published it. We don’t check to see if it is compliant with any existing regulations. We don’t ask what powers it. We do not question what happens to our data or our students’ data once we upload it. We likewise don’t know where its information came from or how it came to generate human-like responses. The trust we put into these systems is entirely unearned and uncritical. Seeing many teacher influencers using AI to create lesson plans on social media is jarring. Many don’t look like trained subject matter experts using a tool to augment their existing skills. Instead, some look like actors in a movie suddenly announcing they know how to fly a plane, and the bit is so played out that the audience knows well before the plane crashes that this supposed skill is more boast than reality.

What Uncritical AI Adoption Means For Teachers

The allure of these AI tools for teachers is understandable—who doesn't want to save time on the laborious process of designing lesson plans and materials? But we have to ask ourselves what is lost when we cede the instructional design process to an automated system without critical scrutiny.

Sure, an AI tool can cobble together information from its training data into a competent output. But an AI lacks the true subject mastery and insight that experienced teachers bring. A human being brings personal and nuanced examples, not a predictable average. We rely on our critical thinking and ethical decision making to unpack difficult concepts and understand how topics connect—something AI just can't replicate. The risk of offloading these skills uncritically to AI means we’re left with watered-down, decontextualized "lessons" devoid of an instructor's enriching knowledge.

Effective teaching requires intention: aligning objectives, assessments, and activities in a cohesive plan. AI struggles with this and requires a user to align these areas using their own judgment. This takes a great deal of time and human skill. Generative tools can create activities, but AI cannot grasp the pedagogical foundations to purposefully scaffold learning toward defined goals. That’s a teacher’s job. The risk of using these tools uncritically as time savers is that students end up with a disjointed collection of tasks rather than a structured experience.

No AI tool can truly account for who your students are. Only skilled teachers can design lessons centered on their specific students' backgrounds, prior knowledge, motivations, etc. Used intentionally, with that knowledge of students guiding it, AI can certainly help augment a teacher, but again, that takes time and friction. AI alone can't analyze and adapt to a classroom's dynamics like a human being can. If we rely on AI alone, we'd be stuck with one-size-fits-all instruction disconnected from those human realities.

One question that keeps arising in my mind throughout this series is where we should draw the line when using an AI tool. We shouldn’t shame someone for using AI. That’s certainly a hard line for me. However, what does it mean if a person uses AI with no intention of being transparent about it? To me, it suggests an assumption that’s grating for some: AI feels like cheating, so let’s hide it. This phenomenon isn’t specific to education. Many industries that adopted AI have done so with no interest in being transparent about how the tool was used.

A teacher using AI without acknowledgment may breach the trust that their position as an authority figure depends on. If we truly care about academic integrity, then we should model open usage of this technology. How can we demand transparency and honest work from students while gleefully using our own AI shortcuts behind the scenes? Doing so would perpetuate the very lack of integrity our instruction is supposed to counteract.

We Should Shape Our Future, Not Technology

Our lives are digital now. To someone living 30 or 40 years ago, the systems we interact with each day might as well be magic. We order our groceries via app and are perfectly comfortable having complete strangers pluck goods from shelves for us. We arrange haircut appointments on our screens and get checked in online before dentist appointments. We get phone calls from our pharmacy with robotic voices telling us our prescription is ready. We meet via video conference anywhere, anytime. Some of us have been in taxis with no drivers, and many more have ordered food from delivery robots. All of us have yelled at, cursed out, and been made to feel foolish by so-called smart assistants in our kitchens and living rooms.

Information moves faster than when we were younger, often unseen and unnoticed. We’re used to getting answers instantly and solving problems with the fewest clicks possible. Routes on roads we’ve never traveled dance before our eyes, with arrival times calculated to the minute. No wonder the marketing for AI tools promises more, because we’re accustomed to living in the era of ever more.

But AI isn’t magic. It’s prone to make errors, many of which are difficult to spot because of the speed of generation and the trust a user invests in the technology. The bias that an output contains can be overt, but also subtle and difficult to identify without critically engaging it. There’s also no way of auditing a response because generative systems have no mechanism for understanding where an answer originated. Truth or falsehood isn’t in the generative cards, only an aggregated prediction of what the system statistically guesses the correct answer might be. And AI often does guess the right answer, making users more likely to trust an output and stop spending the time needed to question it.

See the Sales Pitch for What it is—Marketing

Why would we question the promise of this technologically inclined future of ever-available, always-on machines that make tasks easier? Many of these companies sell AI as the means to end all of society’s problems. A tool to ensure human flourishing. The problem is we have ample evidence that we aren’t flourishing with the current technology we have, and this should make us pause and consider the limits of these promises. Social media has made us more distant and siloed from one another. We date via algorithm now. Tour houses we imagine raising children in via virtual agents. Spend hours each day scrolling through information via dozens of apps on our smart devices. Is this flourishing?

If AI is going to actually help teachers, it needs to be more than a time-saving mechanism to offload labor. It must be used critically and thoughtfully. Offering educators shortcuts for their work is a recipe for devaluing human labor and risks diminishing teachers’ roles from engaged and empathetic mentors into passive mouthpieces for large tech companies' products. Let us use technology with intention, or we risk being used by the same tools.

We didn't receive any updates one evening from my daughter's camp. The app was silent. They were too busy having fun to provide us with a digital update. My wife and I were forced to stop helicoptering our daughter's experience and this made us both extremely anxious. But I went to sleep trusting that she could have a good time and grow, even if I wasn't invited to track her movements for one night.

What I have found is that, contrary to saving me time, good use of AI in connection with lesson planning is actually more time consuming, but leads to higher quality. In other words, my best use of AI as a teacher results in something far superior to what I would have been able to do otherwise, but still takes more time. I've been using it less and less over a wide range of tasks, but more deeply and deliberately on those tasks I do use it for.

I just wrote about the time issue relative to lesson planning. Teachers get a scant 4-10 paid minutes to plan a lesson. Hardly enough time to think critically about what you put in front of students. https://open.substack.com/pub/verenabryan/p/teacher-math-part-ii?r=a6rh&utm_campaign=post&utm_medium=web